Key findings from the 2019 DevTestOps Landscape Report

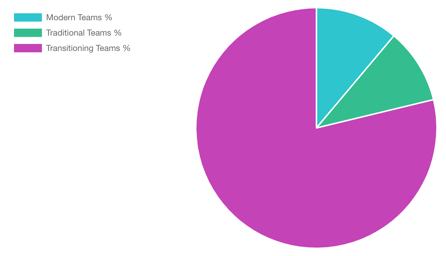

The first DevTestOps Landscape Survey is based on a relatively small number of responses from within the software community. Respondents were likely to be people who are active in the software testing community: attending conferences, reading blog posts, and researching the latest test automation tools. Most indicated that they were on teams transitioning away from traditional development, where testing is done by a separate team with little or no automation and long release cycles. For me, that indicates that respondents represent the direction in which testing and other software practices are heading: a modern, collaborative approach focused on frequent delivery of value to customers.

The full results of the report are viewable at https://www.mabl.com/devtestops-survey-results, but if you just want my key takeaways, keep reading!

“Modern” versus “Traditional” practices

The majority of respondents work on teams with continuous integration (CI), with a significant number moving towards continuous delivery (CD) or continuous deployment (CD). This is in line with what I see in the software community. I was a bit surprised that most respondents are on teams that release between multiple times a day and monthly, which would fit with agile development.

DISTRIBUTION OF RESPONSES BY SOFTWARE DEVELOPMENT CULTURE

Customer happiness and product quality

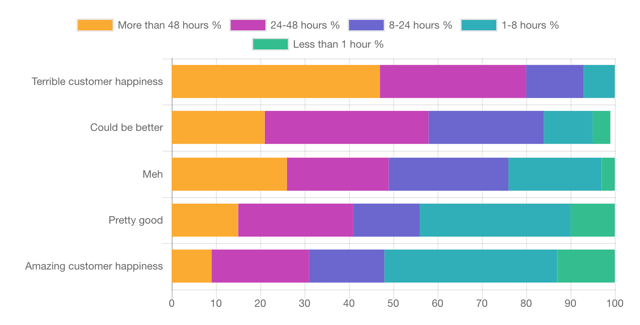

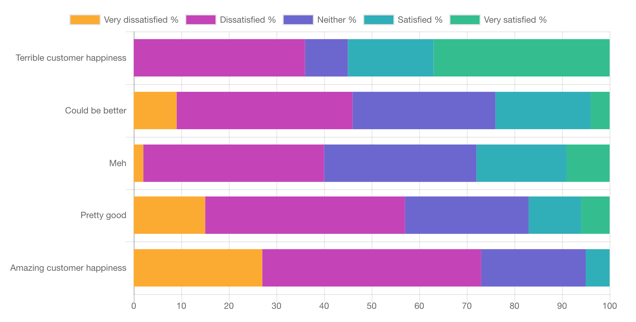

Teams with happy customers tended to be faster at repairing critical production bugs. No surprise there. What seems weird to me is that respondents who said they were satisfied with the tooling were more likely to have unhappy customers. That’s an odd relationship, and we can only speculate about what it means. It may be related to most respondents saying they are often on the lookout for new tools, practices, and strategies to improve product quality. If you never try to improve your toolset, maybe it’s because you’re spending all your time fighting fires in the form of production problems. Or maybe those teams are complacent about their tooling and other solutions, and don’t experiment to see if better tools and techniques would improve customer satisfaction?

LOWERING MEAN TIME TO REPAIR POSITIVELY IMPACTS CUSTOMER HAPPINESS

RESPONDENTS HAPPY WITH THEIR TOOLING ARE MORE LIKELY TO HAVE UNHAPPY CUSTOMERS

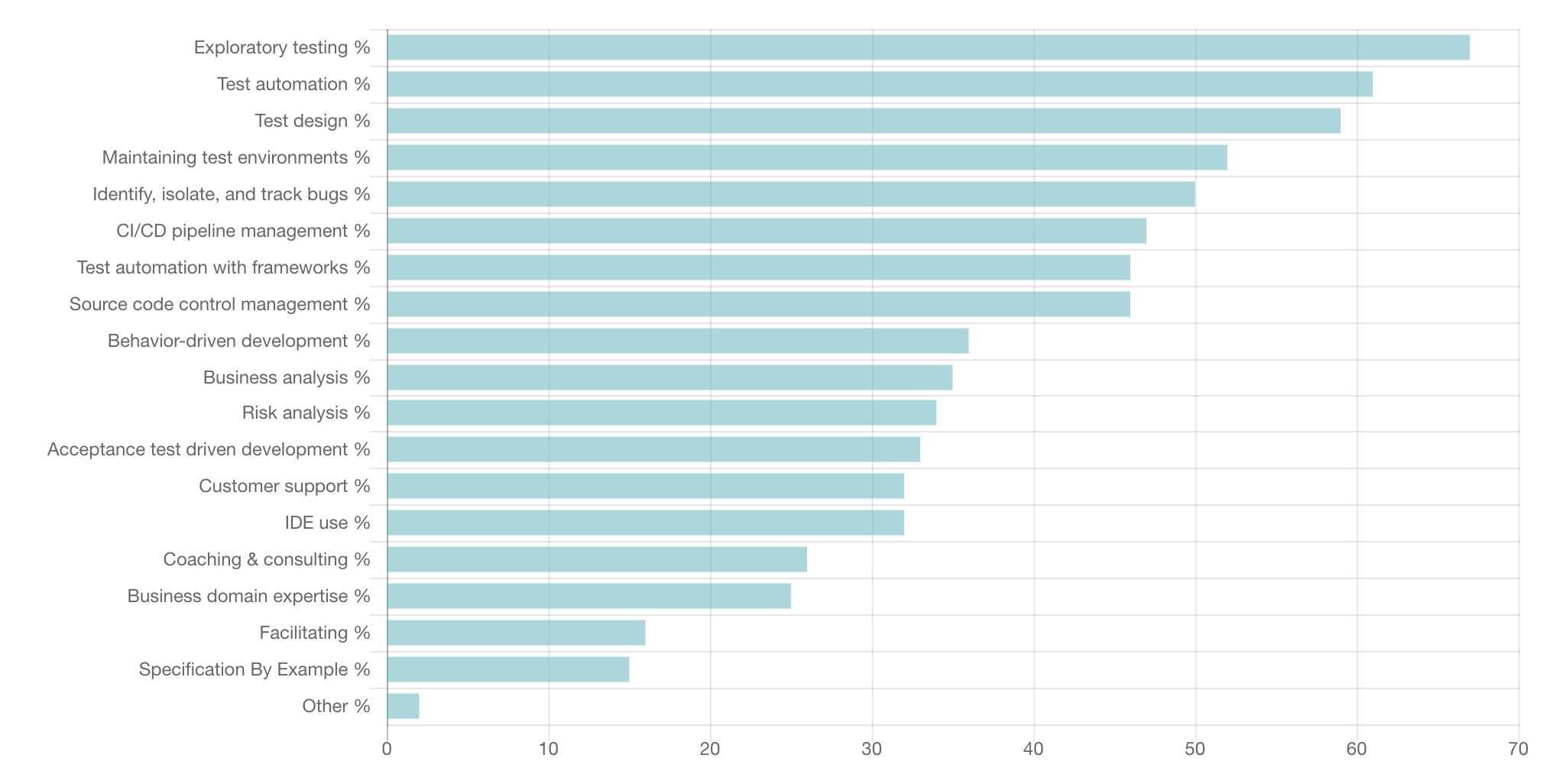

Popular testing activities

The most popular testing activity reported by survey respondents was: (drumroll) exploratory testing! Wow! A few years ago, many teams I encountered weren’t even familiar with exploratory testing. In the early days of agile development, teams focused on functional testing, and many did not see the value of having manual exploratory testing specialists on board. It’s gratifying to see exploratory testing edge out test automation.

In hindsight, behavior-driven development (BDD), acceptance test-driven development (ATDD) and specification by example (SBE) should have been grouped together on the survey because they’re all basically the same activity: guiding development with business-facing tests. Given the high percentage of people doing BDD, and adding in significant percentages for ATDD and SBE, it’s clear that this is an established practice.

The results show that teams that automate are less likely to find bugs in production after release. It’s fair to say that some of this is due to BDD/ATDD/SBE, which not only produce automated regression tests but also help the team build a shared understanding of each feature they develop, making them more likely to deliver what the customer wants. The customer happiness levels for teams that automate versus those that don’t may also reflect the wide adoption of BDD/ATDD/SBE.

QA ACTIVITIES BY POPULARITY

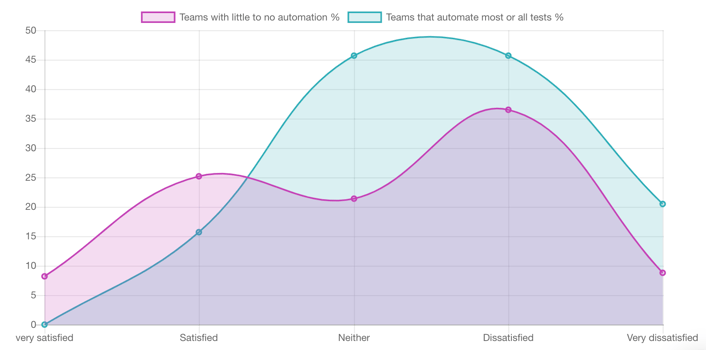

Test automation and satisfaction with testing

Survey results showed that the more automated tests a team has, the less satisfied its testers are with their testing process. It’s hard for me to draw conclusions from that. Some of it could be due to the tedious, time-consuming work of analyzing failures of “flaky” regression tests and trying to fix them. But manual regression testing is just as time-consuming and tedious. This is an area for future surveys to dig into. Certainly the survey shows that testers do the most work related to end-to-end tests.

TEAMS THAT AUTOMATE MOST OR ALL OF THEIR TESTS ARE MORE DISSATISFIED WITH THEIR TESTING PROCESS

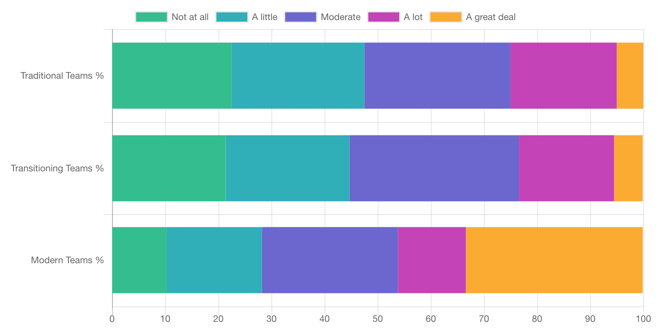

Stress and DevOps

Though so many people reported using up-to-date practices like CI, CD, test automation, and exploratory testing, most respondents are stressed out when it’s time to release. With continuous delivery, releases are supposed to be, to quote https://continuousdelivery.com, “predictable, routine affairs that can be performed on demand.” Even more surprisingly, respondents on teams practicing DevOps were the most stressed. What the heck? The survey shows customers are happier with more frequent releases, and that teams using modern practices have happier customers, so why are the team members struggling at release time?

Again, I can only speculate. A lot of software practitioners think they’re doing “DevOps” because their organization has a “DevOps Team”, which is a bit of an anti-pattern when the idea is for all roles on the team to work together and not in silos. And frequent deliveries aren’t an indicator of success with agile development or DevOps if the team isn’t working at a sustainable pace. I suspect a lot of respondents categorized their teams as using “modern” practices when they’re actually suffering from various dysfunctions. A self-organizing team that manages its own workload and is given time to learn and continually improve can release small changes often, with little risk and thus little stress. A team that is driven by management to release big changes every week or every day, without having mastered the basic practices that support that pace, will cut corners, build up technical debt, and feel itself dragged further down into the abyss.

RESPONDENTS ON DEVOPS TEAMS FEEL THE MOST STRESSED

Culture and performance

The survey showed activities like coaching and consulting, business domain expertise, and facilitating skills towards the bottom of the popularity scale. High-performing teams don’t happen by accident. They’re the result of a nurturing learning culture where they are supported by experts who can help them learn not only the technical tools to use but also the communication and collaboration skills they need to succeed together.

Accelerate: The Science of Lean Software and DevOps by Nicole Forsgren, Jez Humble and Gene Kim delves into the culture of teams based on the State of DevOps surveys. One conclusion: “people who feel supported by their employers, who have the tools and resources to do their work, and who feel their judgment is valued turn out better work.” People with high job satisfaction “get to make decisions, employing their skills, experience and judgment” instead of managing tasks.

I hope that future DevTestOps Landscape Surveys can help us learn more about job satisfaction levels of respondents, and get more detail on what contributes to high-stress releases. It’s clear that we have a community of curious people who are keen to learn about new tools and practices. Perhaps we’ll see that extending to team and cultural practices that contribute to job satisfaction and, subsequently, happier customers.

Check out the full report here for even more findings: https://www.mabl.com/devtestops-survey-results