Welcome to our series of articles introducing you to tried-and-true practices and patterns that help teams create valuable automated tests. Your team can apply these basic concepts to build up tests that quickly inform you about the outcomes of the latest changes to your software product, with a reasonable investment of time.

Powerful Test Automation Practices

Part 2: Great Expectations

In Part 1, we dreamed of the greatest test case ever, and listed elements that it would check with assertions to provide that all-important feedback: is our application behaving as desired? We examined how to apply good testing skills in the context of automation. Now, we’ll take a look at how to use those skills to formulate assertions that optimize feedback. Once you have started to consciously translate your natural scanning into detailed observations, you’re ready to create assertions.

A story of assertions triumphing over regressions

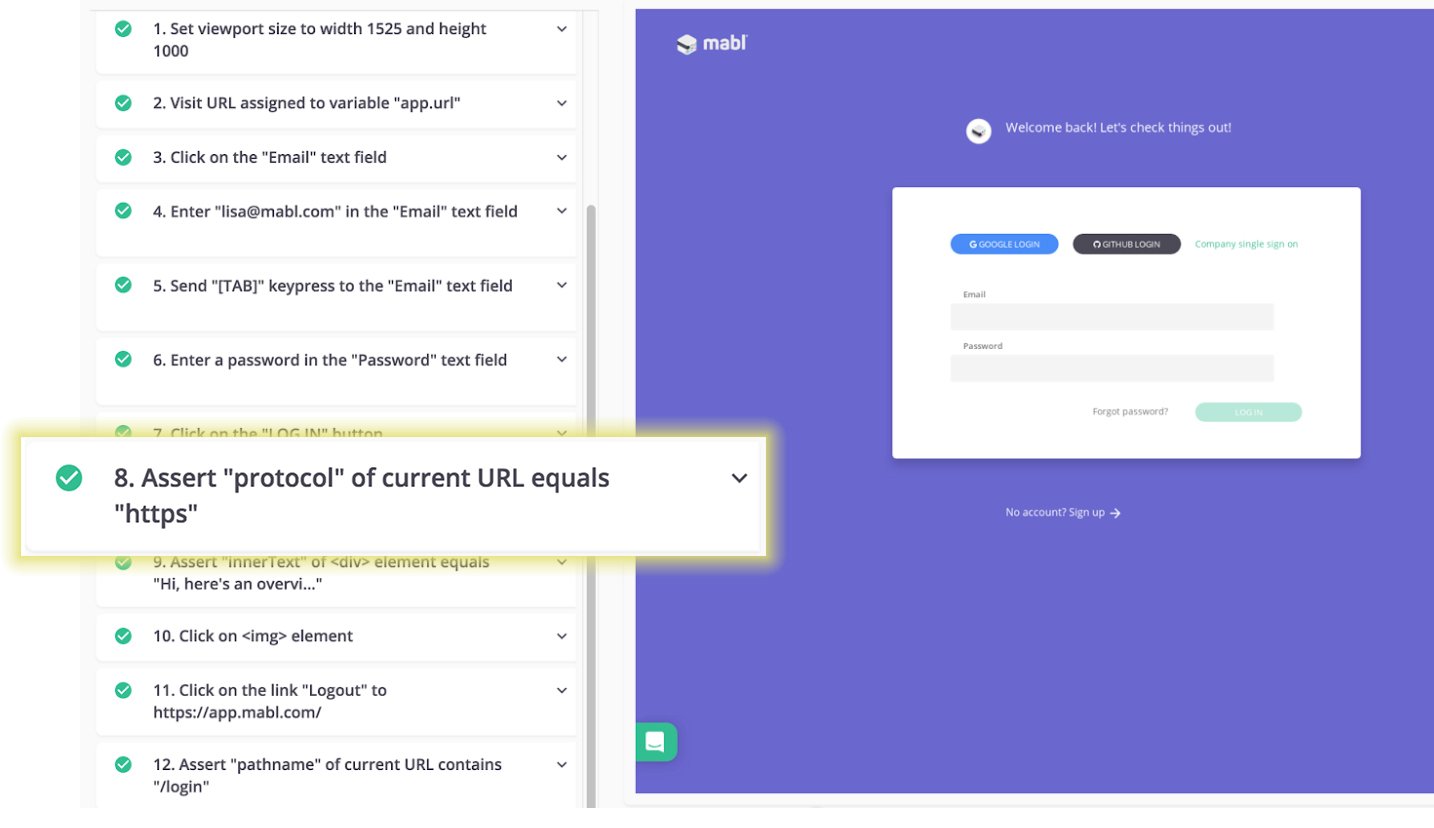

Let’s say, by some random coincidence, that we wanted to test an app called mabl. What would be the first thing you see? That would be the login screen.

Where to start

First, let’s plan what we want to test. The login page is a key part of user experience. Our team wants confidence that code or configuration changes don’t cause any important elements to go missing or functionality to break.

As a tester, you probably start by thinking about what’s important on this page. When you explore it manually, you’re likely to check that all the expected elements look correct, and that the most valuable functionality is present. The login capability is the page’s main purpose. There are four different ways to log in: username/password, Google, GitHub, and single sign-on integration. There are numerous failure scenarios for login, but we’re not going to address those in our first set of tests.

Additionally, the page has marketing and branding elements that are important to the company. Our team worked hard to decide on the brand colors, company logo, and messaging to make the page both inviting and functional. Another key component is the icon in the bottom left corner that lets people get quick help via chat with customer support staff. We want to make sure all of these branding elements are present.

Prioritize on value

We’ve decided on our priorities. We’ll start by verifying the following:

- The customer support chat and marketing/branding elements are present
- The “happy paths” for various ways of logging in work correctly

We’ll address more characteristics of good tests in a later post, but for the time being, let’s make a goal of creating tests that are short, that test one feature or aspect, and that are very intentional in their purpose. We’ll apply automation patterns such as “one clear purpose”, “keep it simple”, and “maintainable testware”. We want assertions that cause a test failure when an unexpected outcome occurs, and that help document the expected behavior of the user interface. We want to avoid assertions that fail for reasons that we don’t care about, for things that don’t affect value to our customers.

Balancing assertion strength and test maintainability

Strong, specific assertions may give us definite feedback that we haven’t caused regression failures. However, especially for teams practicing continuous delivery with frequent production deploys, web application pages are likely to change frequently in minor ways, such as rewording messages, changing colors or appearance that doesn’t affect functionality. The more specific we get with our assertions, the more likely they are to fail tests erroneously, because of a change that might not be important to us. However, if our assertions are too loose, the tests may indicate everything is fine when in fact a regression failure has occurred. Let’s look at some of the options along with guidelines on when to use them.

Equality versus Contains assertions

A common format for assertions is to compare the expected outcome, such as a text string value, with the actual outcome. For optimum test reliability, find the strictest condition that doesn’t over-specify.

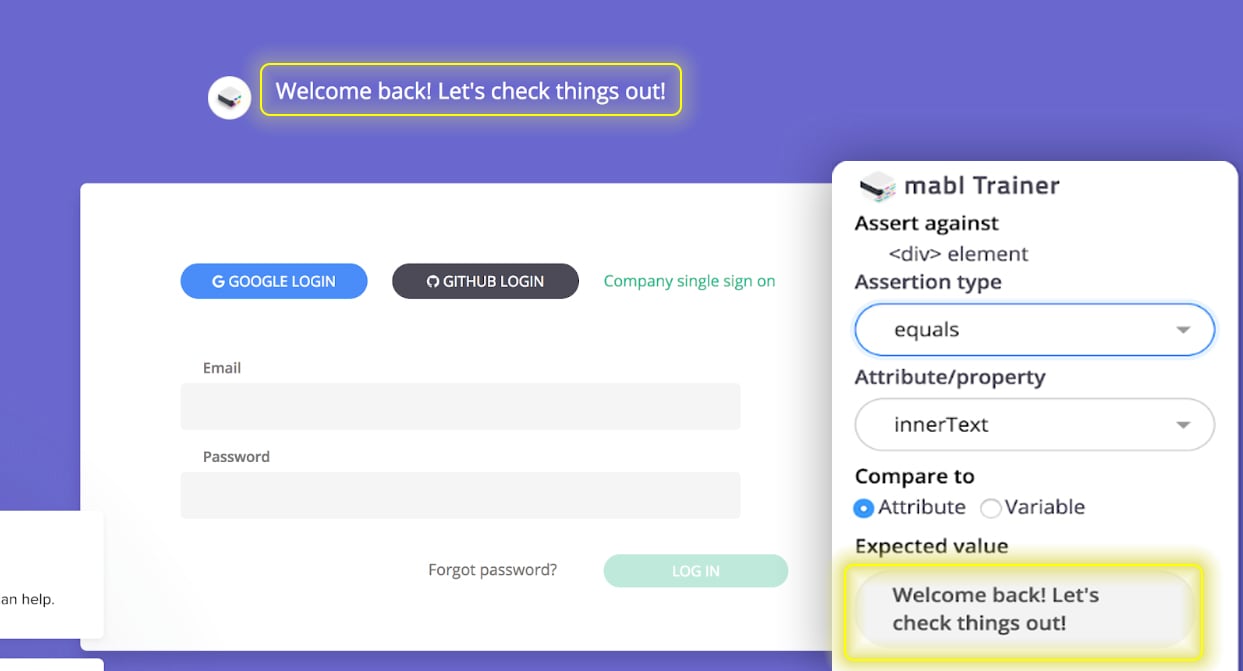

In the following example, we want to make sure there’s a friendly welcome message on the login page. We started with an equality assertion to assert the exact text of the message:

Next week, when the designer decides to reword the message to “Welcome! Let’s get this party started!”, the test will fail. If the value of the test is to make sure there IS a welcome message on the login page, regardless of its specific text, a less fragile approach would be to use a “contains” assertion:
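To make the difference concrete, here is a minimal sketch in Python. The helper names and the original message wording are hypothetical (only the reworded message comes from the example above), and a real test would read the text from the page rather than from a constant.

```python
# Hypothetical original wording; the real text lives in the application.
ORIGINAL_MESSAGE = "Welcome! Sign in to get started."
# The reworded message from the example above.
REWORDED_MESSAGE = "Welcome! Let’s get this party started!"


def text_equals(actual: str, expected: str) -> bool:
    """Strict check: passes only when the whole string matches."""
    return actual == expected


def text_contains(actual: str, fragment: str) -> bool:
    """Looser check: passes when the fragment appears anywhere in the text."""
    return fragment in actual


# The equality check breaks as soon as the copy changes...
print(text_equals(REWORDED_MESSAGE, ORIGINAL_MESSAGE))
# ...while the contains check keeps passing for both versions.
print(text_contains(ORIGINAL_MESSAGE, "Welcome"))
print(text_contains(REWORDED_MESSAGE, "Welcome"))
```

The contains check still fails if the welcome message disappears entirely, which is the regression this test exists to catch.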

As you create assertions in your UI regression tests, consider what might be tested more efficiently in a lower-level test. The specific text value for a message could be verified in a unit-level regression test which gets changed anytime the message text is changed in the code.

UI tests tend to be brittle. A subtle change can cause failures that we spend time investigating, only to find it was a spurious, unimportant failure. It’s a good practice to write UI assertions in the least specific way that still catches the regressions we care about.

Assertions that work in different environments

Ideally, our tests can run in any environment we have, including locally on a developer’s machine, in a test environment, in staging, or production. Use assertions that support this whenever possible. We also want to find the simplest, most straightforward way to assert that a condition we want to check is true.

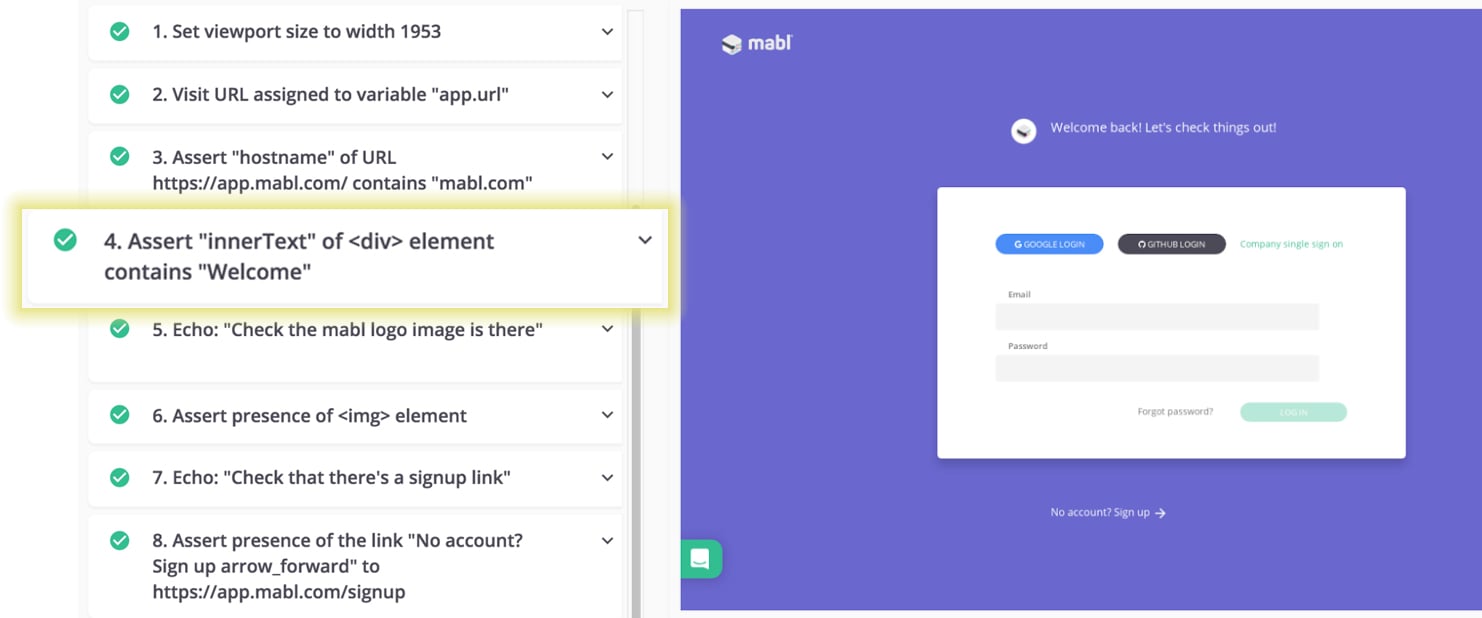

For example, in our test that checks marketing and branding elements, we want a sanity check at the start to make sure we really are on the login page. We can do a simple assertion that the domain name in the URL is correct, without including the entire host name. That assertion remains valid in other environments as long as they follow the same naming convention, such as “test.mabl.com”.

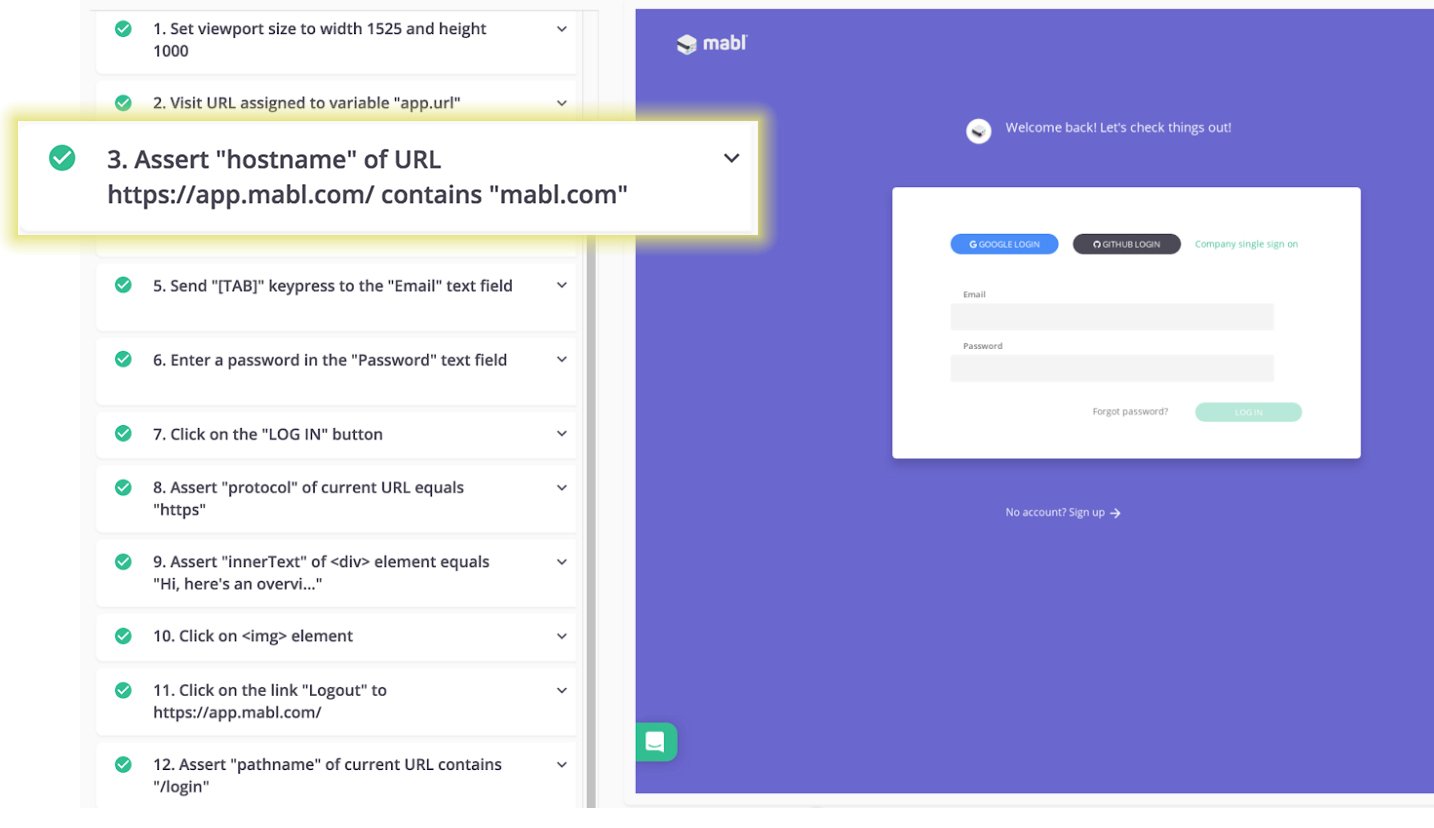
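As a rough sketch of the domain check in Python: the function name, the `app.mabl.com` host, and the `/login` path are assumptions for illustration, and this version compares the parsed host name, which is slightly stricter than a plain “URL contains” check.

```python
from urllib.parse import urlparse


def is_on_domain(url: str, domain: str = "mabl.com") -> bool:
    """Return True when the URL's host is the domain or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return host == domain or host.endswith("." + domain)


# Hypothetical URLs for two environments that share the naming convention.
print(is_on_domain("https://app.mabl.com/login"))
print(is_on_domain("https://test.mabl.com/login"))
# A look-alike host that merely contains the domain text is rejected.
print(is_on_domain("https://mabl.com.example.org/login"))
```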

To make sure our app is serving a secure connection, we prefer a more specific assertion:

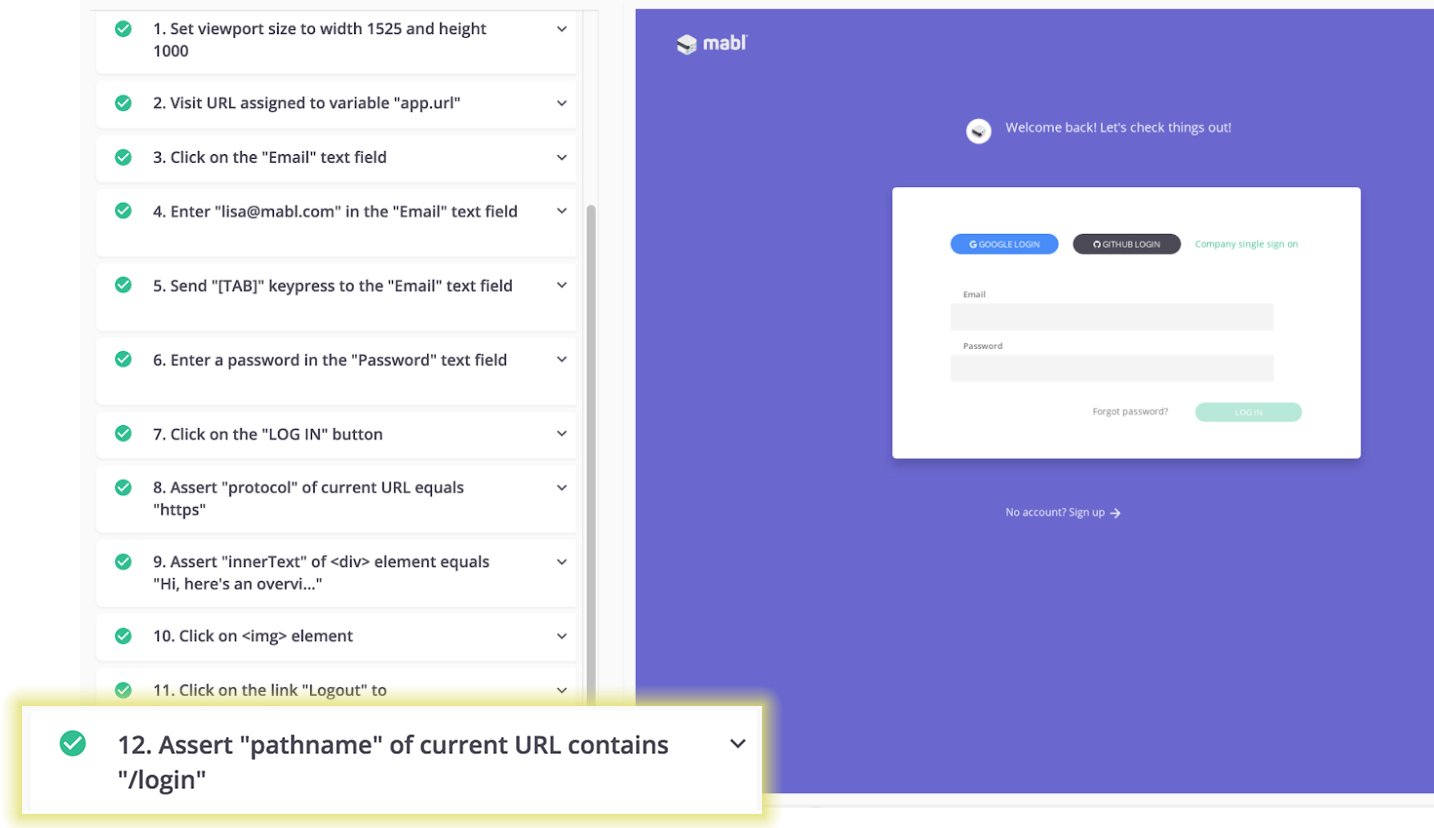
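One way to express the secure-connection check, sketched in Python with a hypothetical helper name and example URLs, is to assert on the URL scheme rather than on the whole address:

```python
from urllib.parse import urlparse


def uses_https(url: str) -> bool:
    """Return True only when the URL scheme is https."""
    return urlparse(url).scheme == "https"


# Hypothetical URLs: the same page served securely and insecurely.
print(uses_https("https://test.mabl.com/login"))
print(uses_https("http://test.mabl.com/login"))
```

This is specific about the part that matters (the protocol) while staying silent about the host, so it still runs in any environment that serves HTTPS.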

Here’s another example of supporting multiple environments with a less-specific but still useful assertion. We want to end our happy path login test by logging out and verifying the login page loads up. Again, by asserting on the pertinent part of the URL, we can get accurate feedback no matter where we run the test, be it dev, test, staging or production:
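A minimal Python sketch of that idea follows. The `/login` path, the helper name, and the example hosts (including the local development address) are assumptions, since the actual URLs are not shown here.

```python
from urllib.parse import urlparse


def is_login_page(url: str, login_path: str = "/login") -> bool:
    """Check only the path portion of the URL, ignoring host and port."""
    return login_path in urlparse(url).path


# Hypothetical post-logout URLs in three environments.
print(is_login_page("http://localhost:3000/login"))
print(is_login_page("https://test.mabl.com/login"))
print(is_login_page("https://app.mabl.com/login?loggedOut=true"))
```

Because the host is never inspected, the same assertion gives the same answer in dev, test, staging, or production.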

Other flexible but useful types of assertions

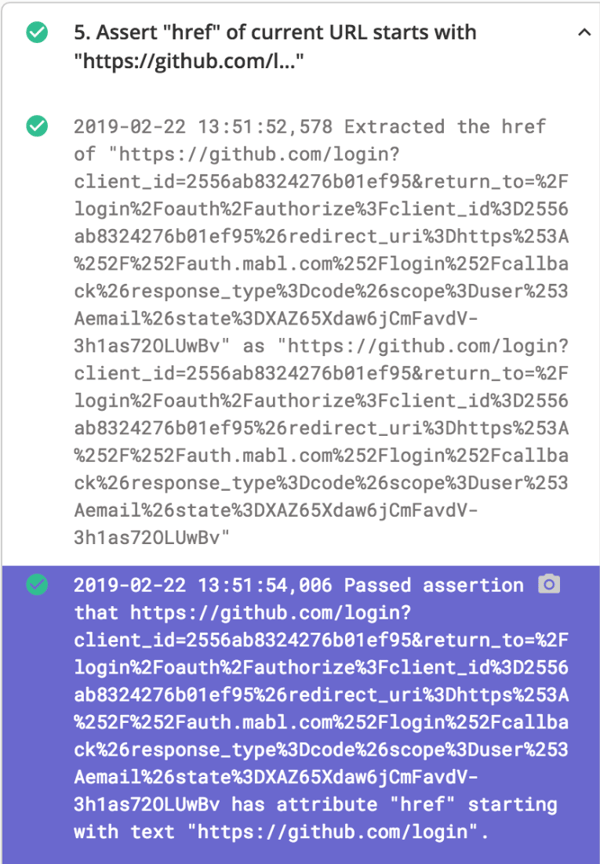

A variation on “contains” is “starts with”. Our test to log in with GitHub clicks the “GitHub Login” button and asserts that the GitHub login page has indeed loaded. We don’t want to assert on the entire string, which contains values that may change, so we assert on just the start of the URL. The test output shows the entire value, but the assertion is only done against the starting value of https://github.com/login.
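Sketched in Python, a “starts with” check might look like the following. The prefix comes from the example above; the helper name and the query string are made up to stand in for the values that change between runs.

```python
GITHUB_LOGIN_PREFIX = "https://github.com/login"


def url_starts_with(actual_url: str, expected_prefix: str) -> bool:
    """Compare only the beginning of the URL, ignoring whatever follows."""
    return actual_url.startswith(expected_prefix)


# Hypothetical full URL; the query parameters vary from run to run.
actual = "https://github.com/login?client_id=abc123&return_to=%2Fauthorize"
print(url_starts_with(actual, GITHUB_LOGIN_PREFIX))
```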

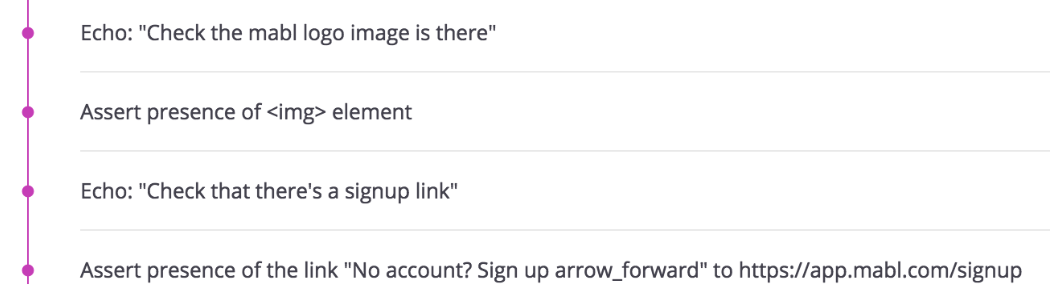

If we just want to make sure a particular button or image is on the page, it may be enough to check that it exists on the page, without checking for details such as a text string value. That way our test will keep working if there are stylistic changes that don’t affect functionality. We assert its presence.
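The sketch below illustrates a presence check in Python using only the standard library. It parses a static, made-up HTML snippet as a stand-in for the live page, and the element ids are hypothetical; a real UI test would query the browser’s DOM instead.

```python
from html.parser import HTMLParser


class ElementFinder(HTMLParser):
    """Records whether any tag with the target id attribute was seen."""

    def __init__(self, target_id: str) -> None:
        super().__init__()
        self.target_id = target_id
        self.found = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the current tag.
        if dict(attrs).get("id") == self.target_id:
            self.found = True


def element_is_present(html: str, element_id: str) -> bool:
    """Return True when an element with the given id exists in the markup."""
    finder = ElementFinder(element_id)
    finder.feed(html)
    return finder.found


# Hypothetical login page markup; ids and text are assumptions.
SAMPLE_PAGE = """
<div>
  <img id="company-logo" src="logo.png" />
  <button id="github-login">Log in with GitHub</button>
</div>
"""

print(element_is_present(SAMPLE_PAGE, "company-logo"))
print(element_is_present(SAMPLE_PAGE, "chat-icon"))
```

The check ignores the button label and image source, so restyling or rewording the element does not fail the test, while removing it does.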

Optimizing feedback

We’ve looked at ways to write assertions that are flexible enough that they won’t flag minor intentional changes, such as updated message text, as regression failures, yet still catch the failures we want to learn about. We’ve also seen that our tests can easily be written to run in multiple environments.

Every context is different. If you’re writing a test case for something where a particular undetected regression failure would be catastrophic, you’ll want a strong, specific assertion.

In our next installment, we’ll delve into some common pitfalls to watch for as you craft test assertions, such as false positives, double negatives, too many expected outcomes, and other assertion anti-patterns.

Resources

The Way of the Web Tester: A Beginner’s Guide to Automating Tests, Jonathan Rasmusson, 2016

Test Automation Patterns Wiki, https://testautomationpatterns.org