To say that people are excited about ChatGPT, and generative AI more broadly, would be an understatement. Few communities are embracing this technology with as much enthusiasm as the quality engineering world, where passionate testers are quickly finding new ways to harness large language models to improve product quality and the customer experience.

The mabl team has long recognized the potential of intelligence to supercharge test automation, and we share the QE community’s excitement for emerging tools like ChatGPT for automated software testing. For the curious mabl user, there are a number of ways to use low-code test automation and ChatGPT for fun and productivity today.

Harnessing the Power of Intelligence + Low-code Test Automation

Low-code and intelligence are natural partners in test automation. Both technologies can be harnessed to elevate the impact of software testing by helping quality professionals and developers reduce rote work. Less rote work means that everyone on your team can stay focused on building innovative, high-quality software that drives business success.

Create Scenarios for Data-Driven Testing

Have you ever wanted to increase test coverage by generating realistic data? Though mabl already supports this through our Faker integration, ChatGPT can help you generate a greater volume of realistic data for a wider range of functions based on your existing testing data. Let’s say, for example, that I had a test that signs up for a service using the following hard-coded values:

First Name: John

Last Name: Doe

Email: john.doe@acme.com

Street Address: 1600 Pennsylvania Avenue

City: Washington

State: DC

Zip: 20500

Employee ID: 222C01-01

I used a simple prompt to generate realistic scenarios in ChatGPT:

Based on this, generate 10 alternatives in CSV format. Use random values for each field.

First Name: John

Last Name: Doe

Email: john.doe@acme.com

Street Address: 1600 Pennsylvania Avenue

City: Washington

State: DC

Zip: 20500

Employee ID: 222C01-01

The results were striking: ChatGPT quickly generated 10 additional name/address/ID combinations based on the single example provided. The examples followed the same data formats and varied each field with a random value. As is often the case, however, it took a few additional prompts to get the exact results I needed:

Convert to CSV format

Display that in code

Randomize the email domains

Add a column for birth date (just for good measure :)

Format birth date like 12-Oct-2023

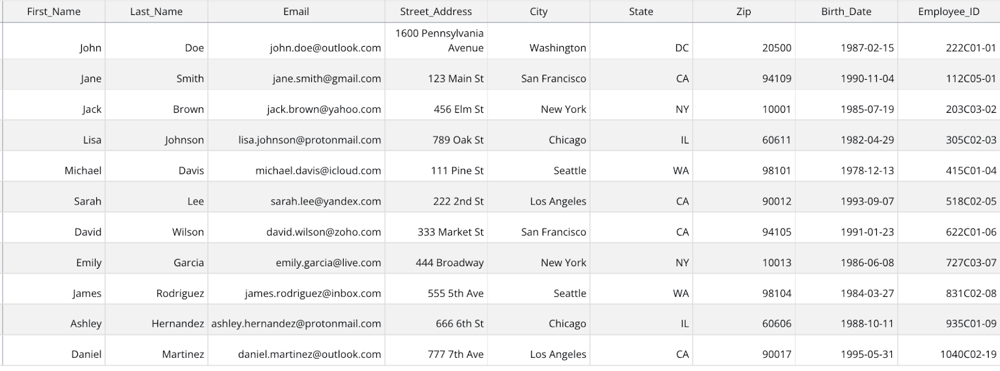

Once the new data was generated, I simply copied and pasted it into a text editor, saved the file as a CSV, and uploaded it into mabl. That gave me this datatable:

Example datatable with ChatGPT-generated scenarios in mabl
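Before uploading, it can be worth checking that every generated row has the same number of columns as the header, since a stray comma inside an address would shift the remaining fields. The following is a minimal sketch of such a check, not something provided by mabl or ChatGPT. The function names and the second data row are made up for illustration, and the parser only handles simple double-quoted fields.

```javascript
// Split one CSV line into fields, honoring double-quoted fields that contain commas.
function parseCsvLine(line) {
  const fields = [];
  let current = "";
  let inQuotes = false;
  for (let i = 0; i < line.length; i++) {
    const ch = line[i];
    if (inQuotes) {
      if (ch === '"' && line[i + 1] === '"') {
        current += '"'; // a doubled quote inside a quoted field is a literal quote
        i++;
      } else if (ch === '"') {
        inQuotes = false;
      } else {
        current += ch;
      }
    } else if (ch === '"') {
      inQuotes = true;
    } else if (ch === ",") {
      fields.push(current);
      current = "";
    } else {
      current += ch;
    }
  }
  fields.push(current);
  return fields;
}

// Return the 1-based row numbers (blank lines ignored) whose field count
// differs from the first row, which is assumed to be the header.
function findMalformedRows(csvText) {
  const lines = csvText.split(/\r?\n/).filter((line) => line.trim() !== "");
  if (lines.length === 0) return [];
  const expected = parseCsvLine(lines[0]).length;
  const bad = [];
  lines.forEach((line, index) => {
    if (parseCsvLine(line).length !== expected) bad.push(index + 1);
  });
  return bad;
}

// Header and first row mirror the hard-coded values above; the second row is invented.
const sampleCsv = [
  "First Name,Last Name,Email,Street Address,City,State,Zip,Employee ID",
  "John,Doe,john.doe@acme.com,1600 Pennsylvania Avenue,Washington,DC,20500,222C01-01",
  'Jane,Roe,jane.roe@example.org,"12 Main Street, Apt 4",Springfield,IL,62701,222C02-07',
].join("\n");

console.log(findMalformedRows(sampleCsv)); // prints []
```

An empty result means every row lines up with the header. Otherwise, the returned numbers point to the rows worth fixing by hand or regenerating with another prompt.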

The entire process took just a few minutes. Luckily, mabl made it easy to configure tests to use this datatable rather than hard-coded values, which increased test coverage. For even more impact, I introduced greater variation. For example, I wanted to add more international scenarios, so I used the following prompt:

Append three more alternatives with addresses and phone numbers in Japan and three more with addresses and phone numbers in the United Kingdom.

And in seconds, I had an updated dataset that introduced new variation in the format of zip codes, phone numbers, etc.

"Lottie","Miller","456 Elm Street, Chicago, IL 60601, United States","+1 312 345 6789","348r2389n33"

"Charlie","Wills","18 Maplewood Ave, London N11 6FG, United Kingdom","+44 20 8145 6789","348p2391t25"

"Charlize","Nakamura","2-18-25 Kichijoji, Musashino-shi, Tokyo 180-0004, Japan","+81 3 8745 1234","348r2392k35"

Write JavaScript Snippets

As a former software tester and developer, I appreciate how mabl combines the ease of low-code with the control of JavaScript snippets, which can be used to create tests for specialized or advanced scenarios.

ChatGPT does a great job of writing simple JavaScript from relevant prompts, including common automation use cases such as string manipulation, browser/API interactions, and more. Here are a few prompts I tried:

Write a JavaScript function that navigates back in the browser

Write a JavaScript function that uses a regular expression to extract the domain from a provided email address

Write a JavaScript function that returns the width of the current window

Write JavaScript that returns the current browser version

Write a JavaScript function that returns a date plus a specified number of days in a format like December 28th, 1984

This was ChatGPT’s response to the last prompt:

    function addDaysAndFormat(daysToAdd) {
      const today = new Date();
      const futureDate = new Date(today);
      futureDate.setDate(today.getDate() + daysToAdd);

      const monthNames = [
        "January", "February", "March", "April", "May", "June", "July",
        "August", "September", "October", "November", "December"
      ];

      const month = monthNames[futureDate.getMonth()];
      const date = futureDate.getDate();
      const year = futureDate.getFullYear();

      const ordinal = date => {
        if (date > 3 && date < 21) return 'th';
        switch (date % 10) {
          case 1: return 'st';
          case 2: return 'nd';
          case 3: return 'rd';
          default: return 'th';
        }
      };

      return `${month} ${date}${ordinal(date)}, ${year}`;
    }

    console.log(addDaysAndFormat(5)); // prints the date 5 days from today in the desired format

Not a bad start!
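One thing to note is that the response always counts from today, while the prompt asked for "a date plus a specified number of days." The following is a small sketch of a variant that accepts the starting date as a parameter, which also makes the output easy to check against a known value. The names `ordinalSuffix` and `addDaysAndFormatFrom` are hypothetical, and this is my own adaptation rather than ChatGPT's output.

```javascript
const MONTH_NAMES = [
  "January", "February", "March", "April", "May", "June", "July",
  "August", "September", "October", "November", "December",
];

// Return the English ordinal suffix for a day of the month (1-31).
function ordinalSuffix(day) {
  if (day > 3 && day < 21) return "th"; // covers 4th-20th, including 11th-13th
  switch (day % 10) {
    case 1: return "st";
    case 2: return "nd";
    case 3: return "rd";
    default: return "th";
  }
}

// Hypothetical variant: format startDate plus daysToAdd days, e.g. "December 28th, 1984".
function addDaysAndFormatFrom(startDate, daysToAdd) {
  const result = new Date(startDate.getTime()); // copy, so the caller's Date is not modified
  result.setDate(result.getDate() + daysToAdd); // setDate rolls over month and year boundaries
  const day = result.getDate();
  return `${MONTH_NAMES[result.getMonth()]} ${day}${ordinalSuffix(day)}, ${result.getFullYear()}`;
}

// Months are zero-based in the Date constructor, so 11 is December.
console.log(addDaysAndFormatFrom(new Date(1984, 11, 23), 5)); // December 28th, 1984
```

Passing `new Date()` as the first argument reproduces the "from today" behavior of the original response.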

It’s exciting to see how ChatGPT and similar tools can be used to make automated testing faster and easier for more people.

The possibilities are endless. Just don’t forget to follow the callback format when you use the snippets in mabl.
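As a rough illustration of the callback format, here is a sketch that wraps a ChatGPT-style helper (the email domain prompt from the list above) in a snippet function. It assumes the snippet entry point is a function named `mablJavaScriptStep` that receives `mablInputs` and a `callback`, and that the value passed to `callback` becomes the step's result; please confirm the exact signature against mabl's snippet documentation. The helper name and the hard-coded address are placeholders.

```javascript
// Assumed mabl snippet shape: the step finishes when callback(...) is invoked.
function mablJavaScriptStep(mablInputs, callback) {
  // Helper kept inside the snippet so the function is self-contained.
  // Returns the part after "@" for a simple address, or null if the input does not look like an email.
  function extractDomain(email) {
    const match = /^[^@\s]+@([^@\s]+)$/.exec(email);
    return match ? match[1] : null;
  }

  // Placeholder value; in a real test this would typically come from a variable.
  const email = "john.doe@acme.com";

  // Hand the result back through the callback instead of returning it.
  callback(extractDomain(email));
}
```

The main adjustment when adapting ChatGPT's output is replacing `return` or `console.log` at the top level with a call to `callback`.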

Summarize a Test

ChatGPT is great at summarization. You can try summarizing a given mabl test by exporting the test steps in YAML format and pasting them into ChatGPT with an effective prompt.

I tried this prompt:

Summarize the following test based on the goals that it is trying to achieve

[pasted YAML from test export]

ChatGPT responded with the following:

The test script provided aims to achieve the following goals:

Validate the API test details by visiting the specified URL and logging into the application using the given credentials.

Ensure the presence of specific elements in the user interface, such as the "New test icon" and the "playlist_play" button.

Verify the contents and functionality of the API test by checking various text fields, links, and elements, such as the "GET /api/users?page=2 description", "Headers", "Body", and "Pre-request" links.

Inspect the "Assertions" and "Script" sections of the test, ensuring that the correct text is displayed and the functionality is as expected, such as the presence of the "15. GET Delayed response simulation" test and the correct assertion messages.

The test script is designed to ensure that the API test is properly set up, with all required elements and functionalities working correctly.

The initial results were fairly comprehensive and detailed, but depending on your organization and needs, you’ll likely need to fine-tune your results. I used additional prompts to improve the summary, such as:

Limit that to the top 3 goals

Limit that to 50 words

Say that in slang

I have to share that last result:

Yo, this test script is all about: 1) checkin' if API test details are cool when loggin' in, 2) makin' sure those dope UI elements show up, and 3) givin' the API test a look-see to confirm it's all good with the right content and functions, ya know?

Specifying Selectors for Locating UI Elements

CSS and XPath queries are an additional option when locating page elements. If intelligent finds, auto-healing, and configured find steps have fallen short, you can use a CSS or XPath query as a last resort. In these cases, most users rely on Chrome’s built-in XPath/CSS lookup, but ChatGPT provides a useful alternative.

I found this initial prompt quite effective:

Provide an xpath selector for the "Start Free Trial" button in the following HTML

<div class="hero-main-text">

<p>Achieve fast and reliable end-to-end test coverage with 90% less effort</p>

<div class="buttons"><a href="https://www.mabl.com/trial-registration?var=hp_b" hreflang="en" class="button w-button">Start Free Trial</a><a href="https://www.mabl.com/product?var=hp_b" hreflang="en" class="button secondary-button w-button">Learn More</a></div>

</div>

Here was ChatGPT’s response:

The XPath selector for the "Start Free Trial" button in the given HTML is:

//a[text()="Start Free Trial"]

Pretty good! Let’s see what ChatGPT thinks about the CSS option:

Use a css query instead

…to which ChatGPT responded with a lesser option based on href.

button.w-button[href="https://www.mabl.com/trial-registration"]
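Whichever selector ChatGPT suggests, it is worth confirming that it matches exactly one element on the real page before relying on it in a test. The sketch below is meant to be pasted into the browser's developer console. It counts matches for an XPath expression and a CSS selector using the standard `document.evaluate` and `document.querySelectorAll` APIs; the helper names are illustrative. Note that in the HTML above the link is an `a` element carrying the classes `button` and `w-button`, so a CSS selector that begins with the tag name `button` would not be expected to match it. The `a.w-button[href*="trial-registration"]` form shown in the comments is one alternative, offered as my own suggestion rather than as ChatGPT's answer.

```javascript
// Count how many nodes an XPath expression matches in the given document.
function countXPathMatches(doc, xpath) {
  const ORDERED_NODE_SNAPSHOT_TYPE = 7; // numeric value of XPathResult.ORDERED_NODE_SNAPSHOT_TYPE
  const result = doc.evaluate(xpath, doc, null, ORDERED_NODE_SNAPSHOT_TYPE, null);
  return result.snapshotLength;
}

// Count how many elements a CSS selector matches in the given document.
function countCssMatches(doc, selector) {
  return doc.querySelectorAll(selector).length;
}

// Example usage in a browser console, where `document` is the current page:
//   countXPathMatches(document, '//a[text()="Start Free Trial"]');
//   countCssMatches(document, 'a.w-button[href*="trial-registration"]');
```

A count of 1 suggests the selector is specific enough for the current page; 0 or more than 1 is a sign to refine it before adding it to a test.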

A fun experiment: asking ChatGPT which approach is better in terms of simplicity, or whether CSS or XPath selectors are better in general. You can engage ChatGPT to help you with selectors in a general sense, and even have a conversation on the pros and cons of different approaches. I started by providing a prompt and an HTML snippet:

Given this html, provide the 5 best selectors for locating the search box

[pasted HTML snippet]

ChatGPT responded with 5 options:

Here are 5 selectors that can be used to locate the search box:

input[type=search]: This selector targets any input element with type="search". In this case, it would match the search box input field.

.rm-SearchToggle: This selector targets the search button element with class rm-SearchToggle, which is the parent element of the search box input.

.Input.Input_md.SearchBox-InputUQZAW9QXMe-c: This selector targets the search box input directly, using its class names. It's a more specific way of targeting the input than using input[type=search].

button[aria-label=Search]: This selector targets the search button element using its aria-label attribute value, which is "Search".

#AppSearch .SearchBox-InputUQZAW9QXMe-c: This selector targets the search box input by combining the ID of its parent element (#AppSearch) with the class name of the input (SearchBox-InputUQZAW9QXMe-c).

Too many choices! So I asked for some help in narrowing the list down.

Which of those selectors is the most reliable?

Naturally, ChatGPT had an opinion on the topic and even some guidance on best practices…

The most reliable selector to locate the search box would be input[type=search] because it directly targets the search box input element using its type attribute, which is specifically designed for search inputs. This selector is less likely to change or be affected by changes to the HTML structure or class names.

However, it's always a good practice to use multiple selectors and consider the context of the search box to ensure that the selector will work in different situations. So it's a good idea to use a combination of selectors to make sure that the search box can be reliably located.

Finally, I wanted to know if any of the selectors was a really bad idea, so I asked:

Which of the selectors is the least reliable option?

ChatGPT had some solid advice.

The least reliable selector to locate the search box would be .rm-SearchToggle. This selector targets the search button element, which is a parent element of the search box input field. It relies on the assumption that the search button will always be present and will always be a parent element of the search box input. If the HTML structure changes or the search button is removed or moved, this selector will no longer work.

Therefore, it's not recommended to use this selector on its own to locate the search box input. Instead, it can be used in combination with other selectors to locate the search box reliably.

What’s Next?

Like low-code, ChatGPT has the potential to save quality contributors time and effort. I hope these experiments inspire you to consider how ChatGPT can support your test automation strategy. If you discover any approaches that you’d like to share with the quality community, connect with us on LinkedIn or Twitter!