At CAST 2018, Ashley Hunsberger and I paired up to facilitate an all-day workshop called “The Whole Team Approach to Testing in Continuous Delivery”. A large part of the day was devoted to learning about deployment pipelines. For those of you who couldn't attend the live version, you can find the slides here. Continue reading below for an introduction to why deployment pipelines are vital to successful continuous delivery, and a fun exercise your team can use to improve your own pipeline.

Continuous delivery - scary?

Continuous delivery (CD) lets us deliver new value to production safely, quickly and sustainably (to paraphrase Jez Humble). Each new commit to our code base trunk or master is independently verified as a deployable release candidate. We can deliver frequently, which means the changes are small, reducing the risk of problems.

Still, CD can seem like a scary proposition. In the old waterfall days, we didn’t have enough time to complete all the testing activities we wanted. Agile development enabled more frequent releases, weekly or bi-weekly, and brought new testing challenges. And now, how can testing possibly keep up with continuous delivery? How can we feel confident about each release, from a testing/customer support perspective?

New approaches

Following the principles of continuous delivery helps us build confidence in our deployments. Following modern testing principles can give us even more confidence. When the whole team takes responsibility for building quality into the product and continually improving how we work, we (and our customers) realize the benefits of CD.

This is a huge topic, but let’s look at two key factors for success with CD. Obviously, we need adequate automated regression test coverage to make sure that the things our customers really need are still working. That’s not new. And, it isn’t a complete safety net. We can have great automated regression test coverage, do thorough exploratory testing, verify performance, and do all the many other types of testing before we release to production, but we are doing all of that in test environments. We know that behavior is likely to be different and unexpected in production.

So what is new? Today, we have the ability to capture and analyze all kinds of data (and huge amounts of it) about production use and immediately detect any errors that occur. This is important, but it’s not that helpful unless we can quickly and reliably deploy fixes to production. And to do that, we need a fast, reliable deploy pipeline.

Pipeline basics

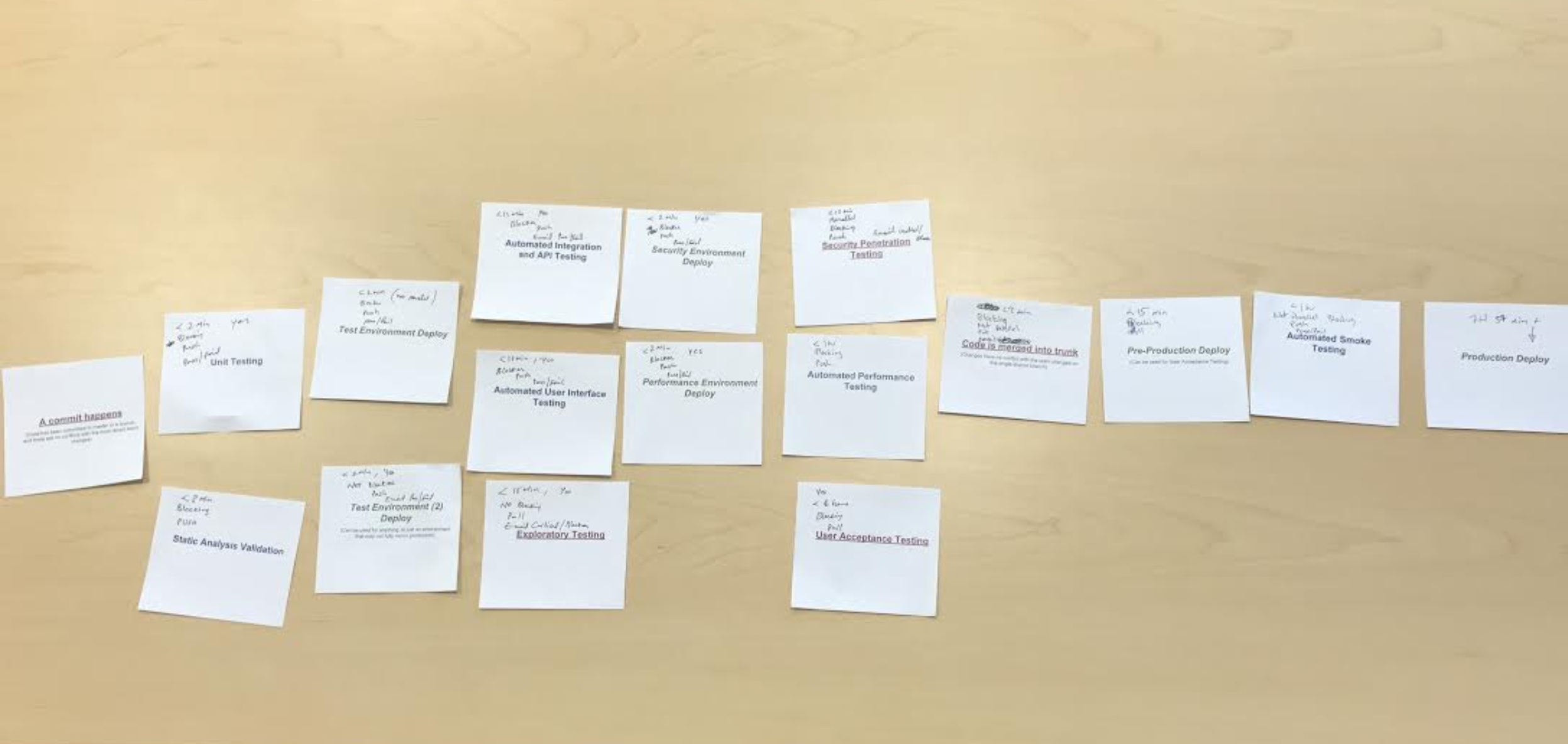

The deployment pipeline consists of steps that changes go through before they can be deployed to production. Every company, every team has their own unique pipeline. We break our build into stages or steps to speed up feedback. There’s a constant trade-off between fast feedback and confidence. The early steps of a pipeline are usually unit tests. They quickly show us whether anything is broken at that low, granular level. But we need more steps with higher-level, longer-running tests to feel more confident. Later steps in our pipeline exercise more complicated scenarios. We want fast feedback, but we may need comprehensive tests for confidence, and they take a long time to run. Here’s an example deployment pipeline:

Example deployment pipeline (created with Abby Bangser)

In this example, the steps outlined in blue are manual steps. We focus on automation when talking about pipelines, but manual steps are common. As in this example, activities like exploratory testing and security testing may be critical and may require manual work. We should be able to automate deploys to different environments, but perhaps your team just hasn’t gotten to that yet.
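
To make the trade-off between fast feedback and confidence concrete, here is a minimal sketch of a staged pipeline in Python. It is not taken from any particular CI tool: the `Stage` and `PipelineResult` types, the `run_pipeline` function and the stage names are all hypothetical. Stages run in order from fastest to slowest, and the run stops at the first failure so that the team hears about a problem as early as possible.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]   # returns True when the stage passes
    manual: bool = False      # True for steps a person performs


@dataclass
class PipelineResult:
    passed: List[str] = field(default_factory=list)
    failed: Optional[str] = None
    skipped: List[str] = field(default_factory=list)


def run_pipeline(stages: List[Stage]) -> PipelineResult:
    """Run stages in order and stop at the first failing stage."""
    result = PipelineResult()
    for index, stage in enumerate(stages):
        if stage.run():
            result.passed.append(stage.name)
        else:
            result.failed = stage.name
            # Everything after the failing stage never gets to run.
            result.skipped = [s.name for s in stages[index + 1:]]
            break
    return result


# Hypothetical stages, ordered from fastest feedback to slowest.
example_stages = [
    Stage("unit tests", lambda: True),
    Stage("integration tests", lambda: False),  # simulated failure
    Stage("exploratory testing", lambda: True, manual=True),
    Stage("deploy to production", lambda: True),
]

result = run_pipeline(example_stages)
print("passed: ", result.passed)
print("failed: ", result.failed)
print("skipped:", result.skipped)

# Manual steps are the natural candidates to ask "could we automate this?"
manual_steps = [s.name for s in example_stages if s.manual]
print("manual: ", manual_steps)
```

Putting the quick unit tests first means a broken build is reported after seconds rather than after the long-running stages have finished.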

Things that slow down the pipeline

“Flaky tests” are a common problem even in completely automated pipelines. We often see this with user interface (UI) tests. Someone makes a change to the UI but forgets to update the automated tests to reflect that. A test environment slows down and causes a test step to time out. The browser version automatically (and unexpectedly) updates in the test environment. Those are just a few common causes of UI test failures.
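
One way to make flaky tests visible is to look through the history of test runs for tests that both passed and failed against the same commit. The sketch below assumes you can export run records as (test name, commit id, passed) tuples; that record format, the function name `find_flaky_tests` and the sample data are assumptions for illustration. Note that it only catches tests whose results vary on unchanged code, such as timeouts in a slow environment. A test that fails consistently because nobody updated it after a UI change will not show up here.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# One record per test execution: (test name, commit id, passed?)
Run = Tuple[str, str, bool]


def find_flaky_tests(runs: Iterable[Run]) -> List[str]:
    """Return tests that both passed and failed on the same commit."""
    outcomes: Dict[Tuple[str, str], Set[bool]] = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    # Two distinct outcomes for one (test, commit) pair means the result
    # changed although the code did not.
    flaky = {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


# Hypothetical history, for illustration only.
history = [
    ("login_test", "abc123", False),
    ("login_test", "abc123", True),     # passed on re-run: flaky
    ("checkout_test", "abc123", False),
    ("checkout_test", "abc123", False), # fails every time: a real failure
    ("search_test", "abc123", True),
    ("search_test", "def456", False),   # different commit: code changed
]

flaky_tests = find_flaky_tests(history)
print(flaky_tests)
```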

Dependencies can also cause difficulties. A failure of one stage can stop multiple subsequent steps from running. Test data may fail to be set up properly, causing test failures. A test environment in the cloud might fail to spin up.
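
To see how far a single failure can reach, you can write down which steps depend on which and walk the graph. This is a rough sketch with hypothetical step names and a hypothetical `blocked_steps` function; it assumes you can describe your pipeline as a mapping from each step to the steps it waits for.

```python
from collections import deque
from typing import Dict, List, Set


def blocked_steps(depends_on: Dict[str, List[str]], failed: str) -> Set[str]:
    """Return every step that cannot run because `failed` did not succeed."""
    # Invert the mapping: for each step, which steps are waiting on it?
    dependents: Dict[str, List[str]] = {}
    for step, upstream in depends_on.items():
        for parent in upstream:
            dependents.setdefault(parent, []).append(step)

    blocked: Set[str] = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for child in dependents.get(current, []):
            if child not in blocked:
                blocked.add(child)
                queue.append(child)
    return blocked


# Hypothetical pipeline: each step lists the steps it depends on.
pipeline = {
    "build": [],
    "unit tests": ["build"],
    "provision test environment": ["build"],
    "load test data": ["provision test environment"],
    "ui tests": ["load test data"],
    "api tests": ["load test data"],
    "deploy": ["unit tests", "ui tests", "api tests"],
}

blocked = blocked_steps(pipeline, "provision test environment")
print(sorted(blocked))
```

In this made-up pipeline, a cloud environment that fails to spin up blocks four later steps, even though the unit tests can still run.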

More steps in the pipeline give us more confidence, but they take more time. And how do we know all the steps are necessary?

When a pipeline experiences more and more delays, we humans have a tendency to increase the size of the items we’re sending through it to try to get more done. If the tests are failing and people keep committing changes, we’ve now got a big batch of changes which is more likely to include regression failures. It’s a death spiral. If our pipeline is reliable and fast, fewer changes go through it each time, reducing the risk of failures.

Collaborating to improve the pipeline

Making problems more visible is a great first step in making them smaller. Gather your team (or at least a representative group, if you have a large team) together. Take cards or paper and write the name of each step of your pipeline, one per card or paper. Put them on a table or on a wall to create a picture of your pipeline. (This can be done virtually if your team is distributed.)

Visualizing a pipeline on a table - picture used with permission from Llewellyn Falco

Next, look at each step in the pipeline and ask “why do we have this step?” What are we learning from it? What business questions does it answer, what risks does it mitigate? Who on the team benefits from this information? You can capture this information on sticky notes to attach to each step.

Then, think about dependencies. For each step, what must be in place for it to run successfully? A test environment? Test data? Specific configurations? Also think about how you can optimize the step. Is it currently done manually? Can you automate it?

Identify what triggers each step. Is it triggered automatically by the success of a previous step? Or manually? Can it be run in parallel with one or more other steps? Do you see opportunities to shorten feedback loops? Again, record the outcomes of these discussions for each step.

Look at the length of your feedback loops. How much time elapses from code commit to completion of each step? What happens if the step fails - do the right people get notified? How? Is there a better way to communicate alerts? My previous team found that everyone was ignoring the Slack alerts generated by pipeline failures, so they hooked the build up to a police light. That got the team’s attention so that failures were quickly investigated and addressed!
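
If you have rough durations for each step, a few lines of Python can estimate how long after a commit each step finishes, and how much running steps in parallel would save. The sketch below is an illustration under simplifying assumptions: the step names and durations (in minutes) are invented, each step starts the moment everything it depends on has finished, the dependency graph has no cycles, and waiting time for manual triggers is ignored.

```python
from functools import lru_cache
from typing import Dict, List


def completion_times(
    durations: Dict[str, float], depends_on: Dict[str, List[str]]
) -> Dict[str, float]:
    """Minutes from commit until each step completes, with parallel steps."""

    @lru_cache(maxsize=None)
    def finish(step: str) -> float:
        # A step can start once its slowest upstream step has finished.
        start = max((finish(p) for p in depends_on.get(step, [])), default=0.0)
        return start + durations[step]

    return {step: finish(step) for step in durations}


# Hypothetical durations in minutes.
durations = {
    "build": 5.0,
    "unit tests": 3.0,
    "api tests": 10.0,
    "ui tests": 25.0,
    "deploy to staging": 4.0,
}
# The three test steps all wait for the build, so they can run in parallel.
depends_on = {
    "unit tests": ["build"],
    "api tests": ["build"],
    "ui tests": ["build"],
    "deploy to staging": ["unit tests", "api tests", "ui tests"],
}

times = completion_times(durations, depends_on)
for step, minutes in times.items():
    print(f"{step}: done {minutes:.0f} min after commit")

sequential_total = sum(durations.values())
print(f"one step at a time: {sequential_total:.0f} min")
```

With these made-up numbers, the UI tests dominate the feedback loop, which makes them the obvious place to look for a shorter path.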

Once you’ve gathered information about each step in the pipeline, choose a pain point to address and think of an experiment to reduce the pain. For example, perhaps your UI test suite has a lot of flaky tests and the team spends a lot of time kicking tests off again and diagnosing the failures that recur. Your experiment might be, “We believe that refactoring the top 10 flaky tests to make them reliable will reduce our pipeline failure rate by 10% in the next month”. Use your retrospectives to evaluate your progress.
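
When you evaluate an experiment like this, it helps to agree up front on what “by 10%” means. The sketch below computes the failure rate before and after, and reports both the drop in percentage points and the relative reduction. The function names and the run counts are hypothetical, chosen only to show the arithmetic.

```python
def failure_rate(failed_runs: int, total_runs: int) -> float:
    """Fraction of pipeline runs that failed."""
    if total_runs <= 0:
        raise ValueError("total_runs must be positive")
    return failed_runs / total_runs


def relative_reduction(before: float, after: float) -> float:
    """Reduction as a fraction of the starting failure rate."""
    if before == 0:
        raise ValueError("cannot reduce a failure rate that is already zero")
    return (before - after) / before


# Hypothetical counts for the month before and the month of the experiment.
before = failure_rate(failed_runs=30, total_runs=120)
after = failure_rate(failed_runs=27, total_runs=120)

point_drop = before - after
relative = relative_reduction(before, after)

print(f"failure rate before: {before:.1%}")
print(f"failure rate after:  {after:.1%}")
print(f"drop in percentage points: {point_drop * 100:.1f}")
print(f"relative reduction: {relative:.1%}")
```

With these invented numbers, the failure rate falls by 2.5 percentage points, which is a 10% relative reduction. Those are very different targets, so say which one you mean in the hypothesis.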

Remember the benefits

A fast, reliable pipeline means that you can confidently deploy frequent, small changes to production. And if you introduce problems to production that cause customer pain, you can shorten the time they feel pain by quickly deploying a fix with your fast, reliable pipeline. Many other factors go into safe, reliable and sustainable continuous delivery, but fast feedback and the ability to deploy changes quickly are a cornerstone of successful CD.

References:

A Practical Guide to Testing in DevOps, Katrina Clokie, 2017

Continuous Delivery, Jez Humble and David Farley, 2010

More Agile Testing: Learning Journeys for the Whole Team, Chapter 23, Lisa Crispin and Janet Gregory, 2014