I was lucky enough to participate in the Association for Software Testing’s annual conference, CAST2018, the week of August 6. Ashley Hunsberger and I facilitated an all-day tutorial on the Whole Team Approach to Testing in Continuous Delivery with a highly engaged, creative, international group of 28 testers and developers.

The best part of any conference is learning, and being inspired with new ideas and perspectives. I’d like to share some of the insights I gained from the excellent workshops and talks I attended.

Seeing patterns that aren’t there

Liz Keogh always sparks new perspectives for me. In her opening keynote, “Cynefin for Testers”, she talked about how we can deal with uncertainty and risk. One way we humans try to cope with an uncertain world is by seeing patterns where they don’t exist. Many of our cognitive biases may have helped us in more primitive conditions, but they often don’t serve us well now.

As we develop new software features, we often jump to the conclusion that we “know” how a new feature should behave. We run with it: we write code, automate tests and proudly show the result to our customer, only to learn that we made incorrect assumptions and now have to do re-work.

Building shared understanding

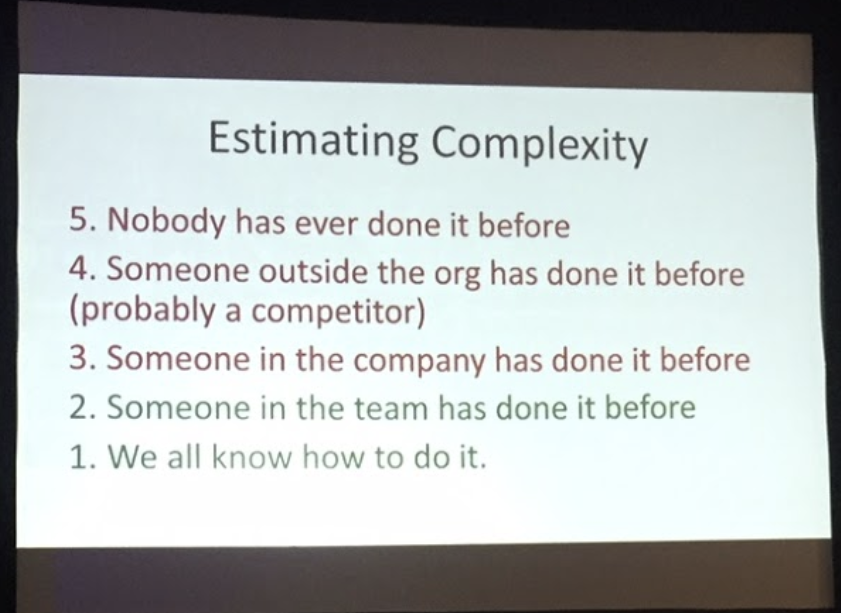

How do we overcome these biases? Liz recommends having conversations. It sounds simple, though we know “simple” doesn’t always mean “easy”! She noted that testers have excellent deliberate discovery skills. When testers, developers, product folks and others get together and talk about a feature, we can learn just how complex a proposed new feature might be, and act accordingly. On Liz’s scale, complexity ranges from “We all know how to do this”, so obvious you say “Like this, duh”, to “Nobody has done it before”: a differentiator, like the very first camera in a mobile phone.

We can’t really “test” a highly complex new feature, but we can use exploration by example to learn about it. We can use examples and user scenarios to reduce the complexity, and we can have the important conversations that build shared understanding of how the feature should behave and what value it can give customers. Behavior-driven development (BDD) is one practice that helps us do that. We turn examples into executable tests at the unit and API/service level, automate them, and use them to guide writing the code that makes those tests pass. Now we can deliver even a truly innovative new feature with a minimum of rework. The resulting automated test suite provides living documentation of how the system behaves as well as protection against regression failures.
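To make the “examples become executable tests” idea concrete, here’s a minimal sketch in Python. It assumes a made-up feature (free shipping on orders of $50 or more), and every name in it (`Order`, `shipping_cost`, `EXAMPLES`, `check_examples`) is hypothetical, invented for illustration. The examples a team might agree on in conversation become a table of unit-level checks, and the production code is written to make them pass. BDD tools such as Cucumber express the same kind of examples as plain-language Given/When/Then scenarios; this sketch skips that layer.

```python
from dataclasses import dataclass


@dataclass
class Order:
    # Hypothetical domain object, invented for this illustration.
    subtotal: float


def shipping_cost(order: Order) -> float:
    """Hypothetical production code, written to make the agreed examples pass."""
    return 0.0 if order.subtotal >= 50 else 5.0


# Examples a team might agree on in a feature conversation:
# (scenario, order subtotal, expected shipping cost)
EXAMPLES = [
    ("order just under the threshold pays shipping", 49.99, 5.0),
    ("order exactly at the threshold ships free", 50.00, 0.0),
    ("large order ships free", 120.00, 0.0),
]


def check_examples() -> list[str]:
    """Run every example and return a description of each failure (empty if all pass)."""
    failures = []
    for scenario, subtotal, expected in EXAMPLES:
        # Given an order with this subtotal, when shipping is calculated,
        # then the cost should match the agreed example.
        actual = shipping_cost(Order(subtotal))
        if actual != expected:
            failures.append(f"{scenario}: expected {expected}, got {actual}")
    return failures


if __name__ == "__main__":
    failures = check_examples()
    print("all examples pass" if not failures else "\n".join(failures))
```

Because the examples live in the test suite, they double as living documentation: the boundary case at exactly $50 is spelled out for anyone reading the checks later.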

When to wait for more certainty

Liz recommends waiting until user interface (UI) uncertainty settles down before trying to automate UI tests. Again, we want to minimize re-work. Keep having conversations until you understand the feature and its value well. In my experience, guiding UI development by testing lo-fi mockups and prototypes is effective in getting to the final design with less wasted effort.

I’ve found Mike Cohn’s Test Automation Pyramid model helpful in most contexts. Pushing test automation to the lower levels, where tests run faster, are cheaper to maintain and generally more reliable than UI level tests, has worked well for my teams. But I think for most web-based apps, we do need some UI level automated regression testing. Lots of regression failures can only be caught when going through all the layers of the application.

I’ve worked on teams who successfully did BDD at the UI level. After listening to Liz’s keynote, I’m considering managing uncertainty by diligently automating as many scenarios as possible below the UI, then waiting until the UI design is fully formed before automating the UI regression checks. I want to experiment with that; I expect that in the long run it could save time and frustration.

(While we’re on the subject of cutting down the time spent on UI test automation, it’s exciting to see the potential of technologies like machine learning to make tests much faster, more robust and more reliable. We can take advantage of the cloud without security concerns, too.)

By automating all these regression tests using leading practices and tools for valuable, low-maintenance tests, we free up testers’ time so they can add value that makes a difference: bringing their unique perspectives to those early product and feature conversations. Testers see patterns others don’t see, and we ask questions others don’t think to ask. The reward comes when someone says “Wait - that’s not right” while we’re discussing how a feature should work, before we’ve spent time writing code and tests!

Accentuate the positive

Liz urged us to focus on positive messages. Start the ball rolling with small changes. Whatever happens, use the “Yes, and…” technique from Improv to keep building on the positive. Tell stories about the feature. Amplify the good stories. Since many of us testers often have to deliver bad news about the products we’re testing, we need to keep this at the front of our minds.

According to Liz, testers are the vanguard of DevOps. Let's embrace that!

Don’t ask “How can we make things predictable?” - that’s not how innovation happens. Instead, ask “How can we cope with the unpredictability?” We can’t remove all the uncertainty, we can’t mitigate every risk, and we can’t be “failsafe”. But we can make it safe to fail when trying to deliver a new feature. Every failed experiment helps us figure out where to go next.

I’ve talked a lot over the years about changing our mindset from “bug detection” to “bug prevention”. Of course we want to catch bugs in code, but it’s even more important to prevent them. We need strong relationships among testers, developers, operations, product, business stakeholders, customer support and more to navigate uncertainty. If we take advantage of testers’ unique perspectives, we can deliver valuable new features in our uncertain world, confident that we’ll delight our customers.

More to come!

Liz’s keynote was just the beginning of my CAST learning journey. I’ll share more insights on managing risk, hiring excellent testers, including neurodiverse ones, and more in future posts.