How testing processes change when you “shift left”

In “Shift left, shift right - what are we shifting, and why”, I explained some of the differences between the linear software development used in phased-and-gated approaches and the “test early, test often, test in production” approach that contemporary agile and DevOps teams practice. Shifting “left” doesn’t mean that we spend a huge amount of time specifying detailed requirements up front, and shifting “right” doesn’t mean we just throw our code over the wall into production and let customers find our bugs.

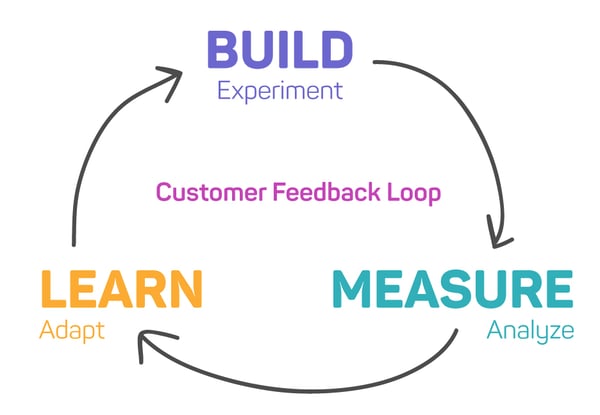

Illustration from “Continuous testing in DevOps”, Dan Ashby, https://danashby.co.uk/2016/10/19/continuous-testing-in-devops/

Shifting left (and right) means getting everyone on the delivery team engaged in testing activities. Testing changes from a scramble right before release to something your team talks about and does every day. Let’s look at some healthy changes we make to our testing processes when we adopt a continual, holistic testing approach.

Testing feature ideas

As the product team and our delivery team learn how our customers use our product and what value they are still seeking in it, we get new ideas for features. In waterfall, the product design team would wait until they had a critical mass of new features, then start a long process of designing them. Testers might not even have been aware that new features were underway. In modern software development, we can test new feature ideas right away, by asking questions like:

- What’s the purpose of the feature? What problems does it solve for our customers? Our business?
- How will we know this feature is successfully meeting customer needs, once it is in production?
- What’s the smallest slice of this feature we can build and use as a “learning release” to make sure it has value?
- What’s the worst thing that could happen when people use this feature? What’s the best?

Using tests continually to guide development

In the “bad old days”, testing was left to the end of the project after the code was “frozen”, that is, no new features could be added in. No testing was allowed before that, because it was seen as “wasteful” to test the same code more than once. The QA team had a mad scramble to test as much as they could before the delivery deadline, rarely testing enough. When we test throughout the cycle of delivery, discovery, and more delivery, we build quality into the code and avoid unpleasant surprises right before release.

Here’s a typical “shift left” scenario: Your team has decided to build Feature ABC. The product owner has written an epic for it and the team has sliced that into small, testable stories. You’re having a specification workshop or a discovery session with three amigos (or more) to discuss the stories before the planning session with the whole delivery team. I’ve found that kicking this type of discussion off by asking “How will we test this?” leads to a productive discussion. We can talk about how to test a feature and its individual stories at all levels including unit, service level/API, UI, or other levels as appropriate. In my experience, I’ve found this helps the team design testable code, which leads to more reliable code that is easier to understand and maintain.

What about test plans?

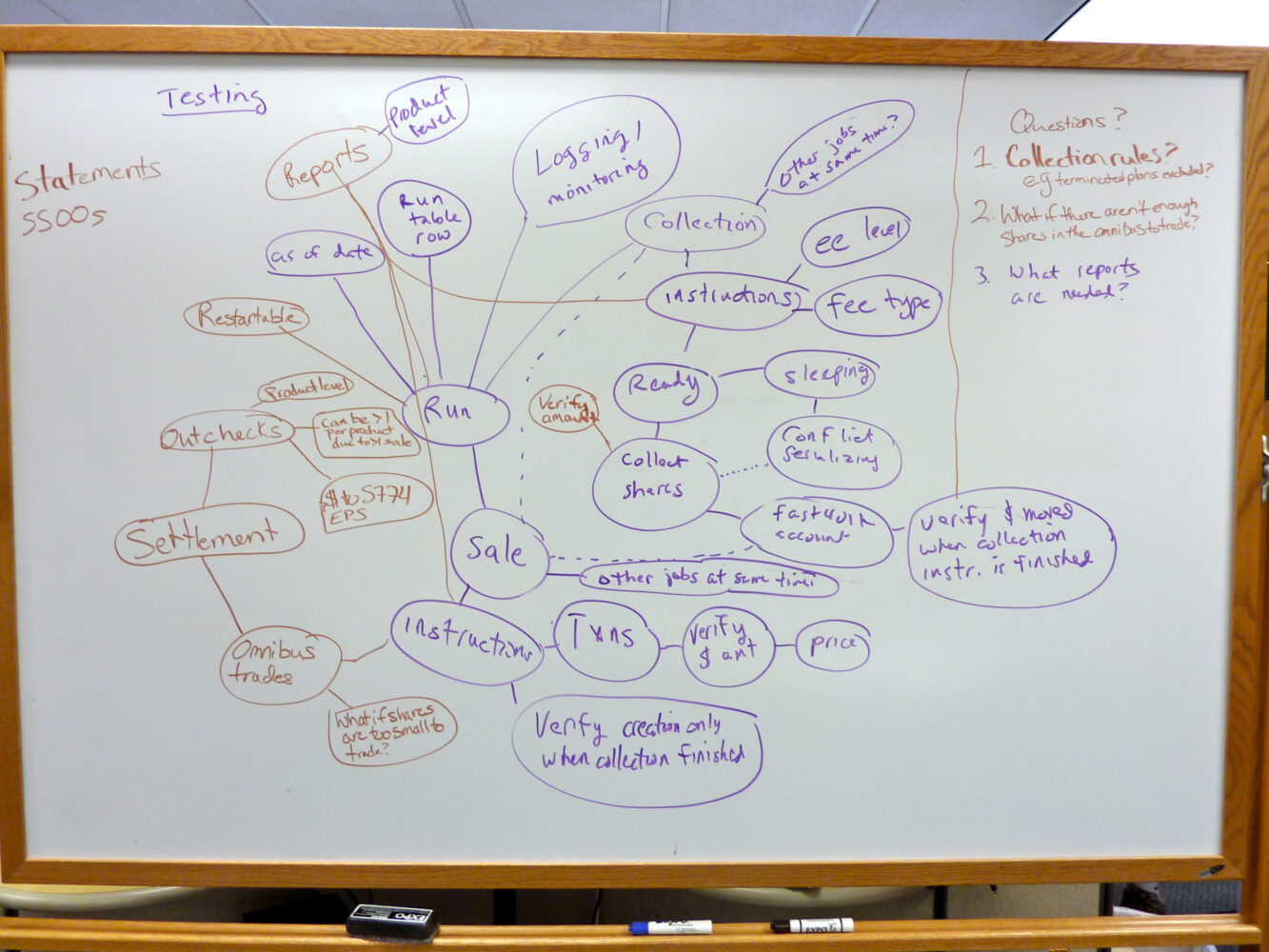

For most business domains, the days of heavyweight test plans are over (it’s questionable how many people ever read them anyway!). Many teams find mind maps are a better solution for planning and tracking testing. A simple one-page test plan can also work well. So can a test matrix written on a whiteboard! Experiment with different formats to see what works best for your team.

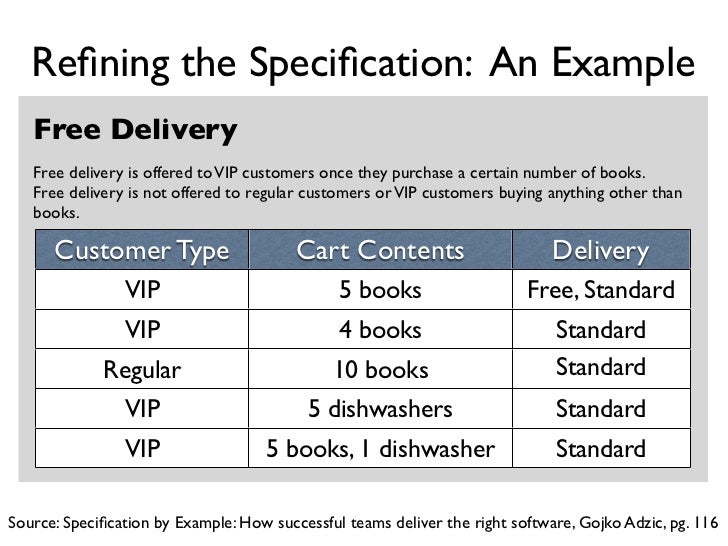

Instead of formal test plans, I like to capture a few examples of desired and undesired behavior for each story as we discuss them in our “amigos” meetings. The team can turn those into executable tests that guide development. Once the feature is in production, these tests become living documentation of how it works, as well as automated regression tests to make sure future changes don’t break it. My teams found that as we built each feature, we thought of more test cases to automate or explore manually, which improves your chances of delivering exactly what your customers want.
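To make the idea of turning examples into executable tests concrete, here is a minimal sketch in Python. Everything in it is an assumption made up for illustration: the story ("orders of 50.00 or more ship free, smaller orders pay a flat 5.00"), the `shipping_cost` function, and the example values are hypothetical, not taken from any real project. The point is only that each row in the examples table is something the amigos could have written on a whiteboard.

```python
from decimal import Decimal

# Hypothetical business rule agreed in the amigos conversation.
FREE_SHIPPING_THRESHOLD = Decimal("50.00")
FLAT_RATE = Decimal("5.00")


def shipping_cost(order_total: Decimal) -> Decimal:
    """Return the shipping charge for a hypothetical order total."""
    if order_total < 0:
        raise ValueError("order total cannot be negative")
    if order_total >= FREE_SHIPPING_THRESHOLD:
        return Decimal("0.00")
    return FLAT_RATE


# Desired behavior, captured as (description, order total, expected cost).
EXAMPLES = [
    ("well under the threshold", Decimal("10.00"), Decimal("5.00")),
    ("just under the threshold", Decimal("49.99"), Decimal("5.00")),
    ("exactly at the threshold", Decimal("50.00"), Decimal("0.00")),
    ("well over the threshold", Decimal("120.00"), Decimal("0.00")),
]


def run_examples() -> list[str]:
    """Check every example and return a list of failure descriptions."""
    failures = []
    for description, total, expected in EXAMPLES:
        actual = shipping_cost(total)
        if actual != expected:
            failures.append(f"{description}: expected {expected}, got {actual}")

    # Undesired behavior: a negative total must be rejected, not priced.
    try:
        shipping_cost(Decimal("-1.00"))
        failures.append("negative total was accepted")
    except ValueError:
        pass
    return failures
```

An empty list from `run_examples()` means every example still holds. In practice a team would more likely express the same table in whatever test framework or BDD tool it already uses; the plain function above just keeps the sketch dependency-free.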

Test early and often, automate early and often

Models such as the test automation pyramid help guide discussions around how to automate various types of tests. Sometimes when my team discussed how to automate testing for a particular feature at different levels, the developers said it would be easier to test-drive their code with API-level tests. Other times they could cover everything at the unit level and we didn’t need higher level tests.

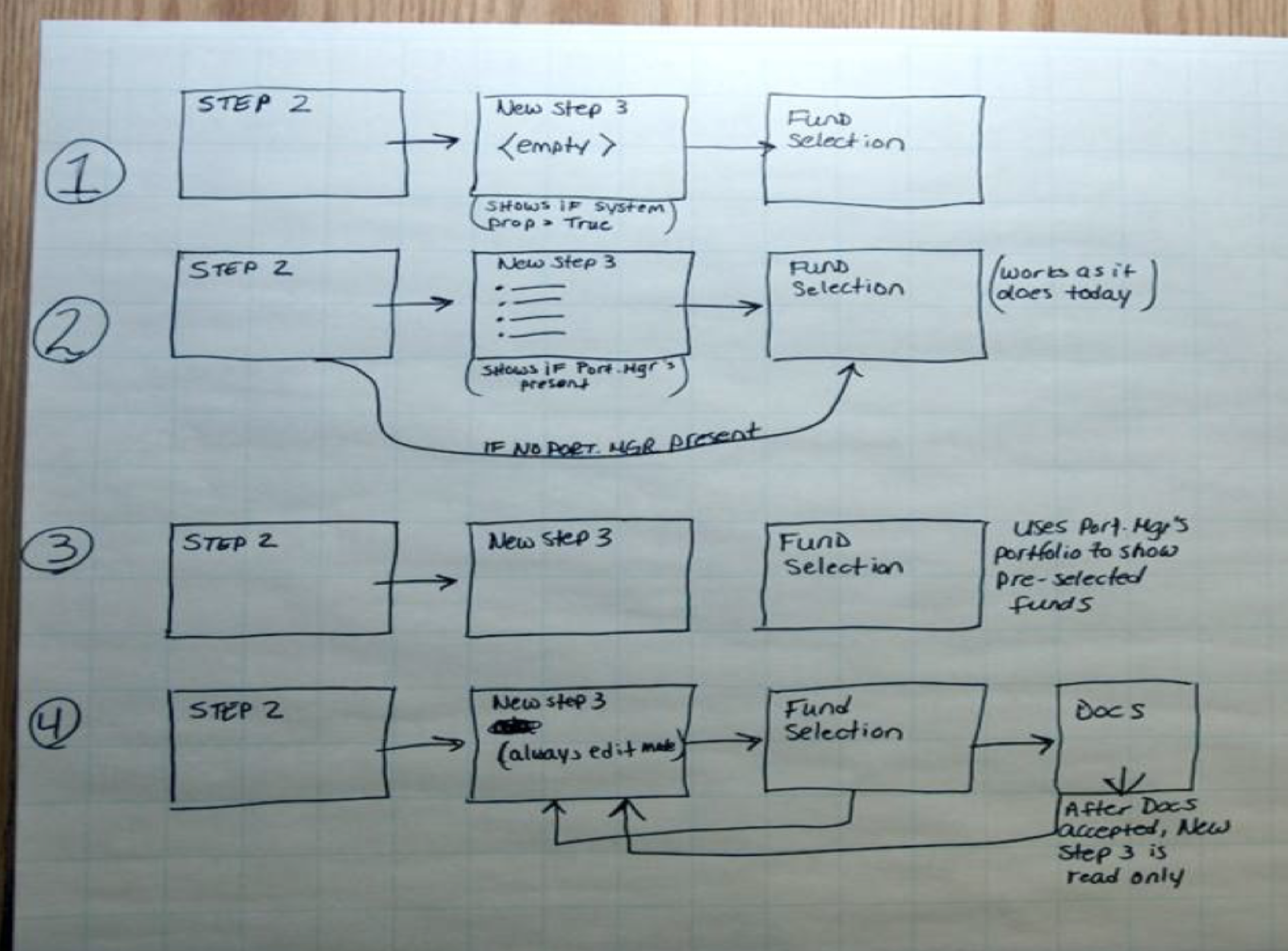

In agile development, we build features incrementally, and we can automate as we go. Here’s an example from one of my teams. We added a new step into an existing UI process. First, we simply delivered an empty page where the new step would be. It wasn’t functional, but we could demonstrate navigating to it, and we could automate a simple UI test for it. Then we added some read-only data on the step, and a way to skip over the step if it wasn’t needed. We showed this to our stakeholders, and updated our automated UI tests accordingly. We kept building onto both the production and the test code for this capability in the UI. By adding and expanding automated tests with every incremental delivery, we could avoid a scramble to automate tests at the last minute, and even better, avoid putting test automation off until a future iteration, which would add to our technical debt.

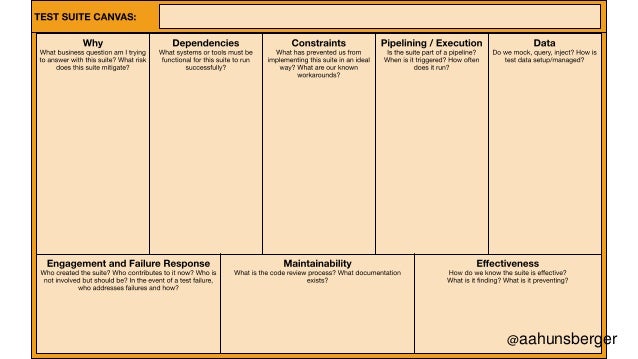

If some or all of your product isn’t yet supported by automated tests, that’s another challenge for the whole team to tackle. Nobody has time and money to automate everything, especially in the short term. I find it helpful to get a representative cross-functional group together, draw the high-level system architecture on a whiteboard (real or virtual), and identify the riskiest areas. Use those risks to make intelligent decisions about what to automate next. Ashley Hunsberger’s Test Suite Canvas provides a great framework for these risk-based discussions as well.
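One lightweight way to record the outcome of that whiteboard session is a simple risk score per area. The sketch below is only an assumption about how a team might do it: the area names, the 1 to 5 scales, and the "likelihood times impact" formula are illustrative choices, not part of the Test Suite Canvas or any other published method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Area:
    """One box from the (hypothetical) architecture whiteboard."""
    name: str
    failure_likelihood: int   # 1 (rarely breaks) to 5 (breaks often)
    customer_impact: int      # 1 (minor annoyance) to 5 (severe)
    has_automated_tests: bool

    @property
    def risk(self) -> int:
        # Simple illustrative score: likelihood multiplied by impact.
        return self.failure_likelihood * self.customer_impact


def automation_backlog(areas: list[Area]) -> list[Area]:
    """Return areas without automated tests, riskiest first."""
    uncovered = [area for area in areas if not area.has_automated_tests]
    return sorted(uncovered, key=lambda area: area.risk, reverse=True)


# Made-up scores a cross-functional group might agree on.
AREAS = [
    Area("checkout", failure_likelihood=4, customer_impact=5, has_automated_tests=False),
    Area("search", failure_likelihood=3, customer_impact=3, has_automated_tests=False),
    Area("login", failure_likelihood=2, customer_impact=5, has_automated_tests=True),
    Area("reporting", failure_likelihood=4, customer_impact=2, has_automated_tests=False),
]

for area in automation_backlog(AREAS):
    print(f"{area.name}: risk {area.risk}")
```

The numbers matter less than the conversation that produces them. Revisiting the scores as production data comes in keeps the backlog honest.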

When testers, developers and other team members collaborate to automate tests as coding proceeds, there’s less waiting around to get questions answered, more expertise to write maintainable tests, and less rework. Quite a contrast to the handoffs in waterfall processes.

Applying what we learn from production

Back in the day, when we finally kicked a release out to production after months of work, we could spend a few days recuperating from all that last-minute testing and bug fixing, then slowly start our next project. That old project went completely off our radar. We didn’t give any thought to how customers were using the features we’d delivered.

Today’s technology lets us log all kinds of information about how customers use our product’s features and what errors occur in production, and even track eye movement across a user interface. Machine learning and other advanced techniques help us quickly analyze huge amounts of data. Monitoring tools alert us to problems. Teams can instrument every event in their code for observability to quickly pinpoint any production issues. Analytics tools give us amazing detail on how people use our software. All of this information feeds back into our discovery process to figure out the next valuable feature we should build. It also helps us focus our limited testing time to mitigate risks and ensure our customers enjoy features that are truly valuable to them.
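As a rough illustration of instrumenting events, the sketch below uses only Python’s standard `logging` and `json` modules to emit one structured line per event and then count events by name. The logger name, the event names (`step_viewed`, `step_skipped`), and the helper functions are all hypothetical; a real team would usually send these events to whatever monitoring or analytics tool it already runs.

```python
import io
import json
import logging


def build_event_logger(stream) -> logging.Logger:
    """Create a logger that writes one JSON document per line to `stream`."""
    logger = logging.getLogger("feature_events")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.handlers.clear()      # avoid duplicate handlers if called twice
    logger.propagate = False     # keep events out of the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(message)s"))
    logger.addHandler(handler)
    return logger


def record_event(logger: logging.Logger, name: str, **fields) -> None:
    """Log a single structured event, e.g. a user reaching a new UI step."""
    logger.info(json.dumps({"event": name, **fields}, sort_keys=True))


def count_events(log_text: str, name: str) -> int:
    """Count how many logged events carry the given name."""
    return sum(
        1
        for line in log_text.splitlines()
        if line and json.loads(line)["event"] == name
    )


# Usage: an in-memory stream stands in for a real log destination.
stream = io.StringIO()
events = build_event_logger(stream)
record_event(events, "step_viewed", user="u1")
record_event(events, "step_skipped", user="u1")
record_event(events, "step_viewed", user="u2")
viewed = count_events(stream.getvalue(), "step_viewed")
```

Even a count this simple can answer a discovery question such as "how often do people skip the new step?", which in turn tells the team where to focus its next round of testing.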

I’ve collaborated with the operations folks who keep our production software up and running for my whole career, so the move to DevOps was a natural progression for me. Not all testers have had those opportunities, but now is the time to build your bridge to Ops. Collaborate with the people who carry the on-call pager, who look after security, and who plan for disaster recovery. Testing skills such as risk analysis, the ability to see patterns, and asking great questions nobody else thought of are valuable for DevOps activities.

Watch those monitors, find out what customers are reporting to your support staff, and learn to use analytics tools. Let production use guide your testing and development. This brings us back full circle to feature ideas. Understanding our customers’ needs and pain points helps us question and test those new feature ideas.

The infinite loop of continual testing is an exciting ride! Compared with what we think of as traditional over-the-wall testing, it’s a totally new process. Collaborate with everyone on the team to build quality in by engaging in testing activities throughout the discovery and delivery loop.

Resources for additional learning:

“Key skills modern testers need”, Interview with Janet Gregory on StickyMinds https://www.stickyminds.com/interview/key-skills-modern-testers-need-interview-janet-gregory

https://www.agileconnection.com/article/three-amigos-strategy-developing-user-stories

Discovery: Explore Behavior Using Examples, Seb Rose and Gáspár Nagy, http://bddbooks.com/

“The One Page Test Plan”, Claire Reckless, https://www.ministryoftesting.com/dojo/lessons/the-one-page-test-plan

“Monitoring and Observability”, Cindy Sridharan, 2017, https://medium.com/@copyconstruct/monitoring-and-observability-8417d1952e1c