It’s human nature to compare ourselves to others - and that’s a good thing. Competition spurs us to improve, and seeing what others have accomplished unearths new possibilities that we may not have thought of ourselves.

The DevTestOps Landscape Survey, which we’ve run for the last two years, reveals what the industry is doing to ensure quality while shipping software at rapid speeds. How teams do so naturally depends on the size of the organization, the maturity of the development processes, the product under development, and many other factors. Still, you can evaluate how effective your development practices are against the industry as a whole and distill lessons to shape your goals for the next few years.

In this blog, we’ll share what teams are doing at a high level when it comes to test automation. If you want to dig deeper, download the full report here.

Testing tool adoption

The data for this report comes from a public survey that we ran from September 2019 to March 2020 and shared with the software testing and development community via our social channels, at conferences, and on technical news and article platforms. The survey was open to all roles that affect software quality, and we received about 1030 responses from testers, developers, operations and site reliability engineers, and managers around the globe.

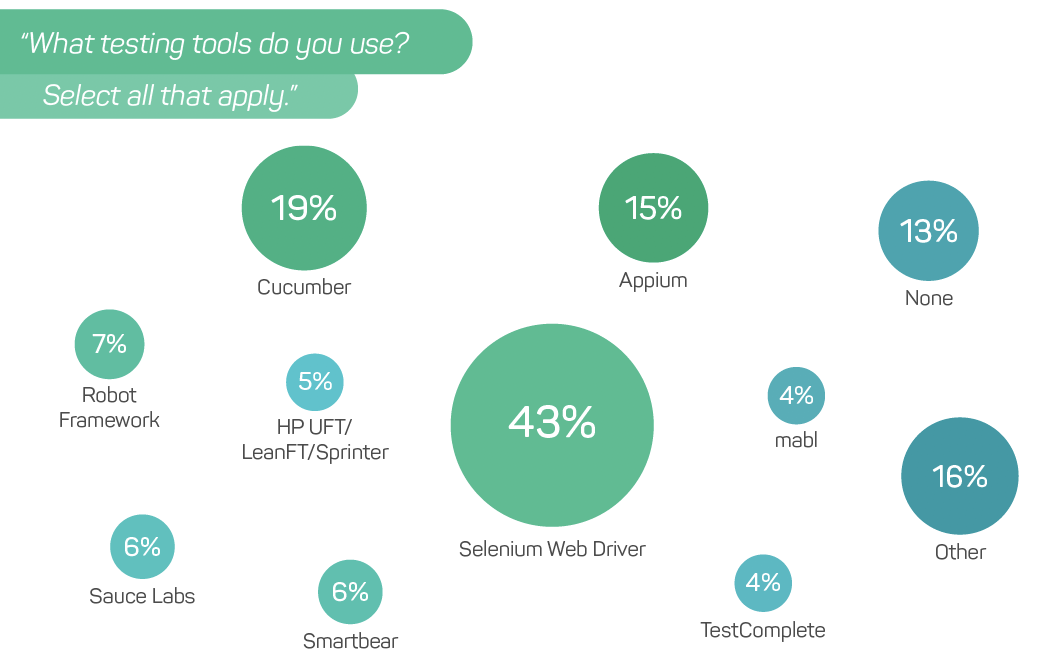

Though tools are only as good as their users, we were still curious to see what test tooling adoption looks like. To keep the charts concise, we did not list any tools that received less than 1% of responses.

Selenium WebDriver still reigns supreme, though our survey revealed that there has been a 7% drop in its usage since last year. With so many new test automation solutions now available that make UI test automation easier than using Selenium alone, it would not surprise us if Selenium adoption continues to drop in the years to come.

Automation vs. manual testing

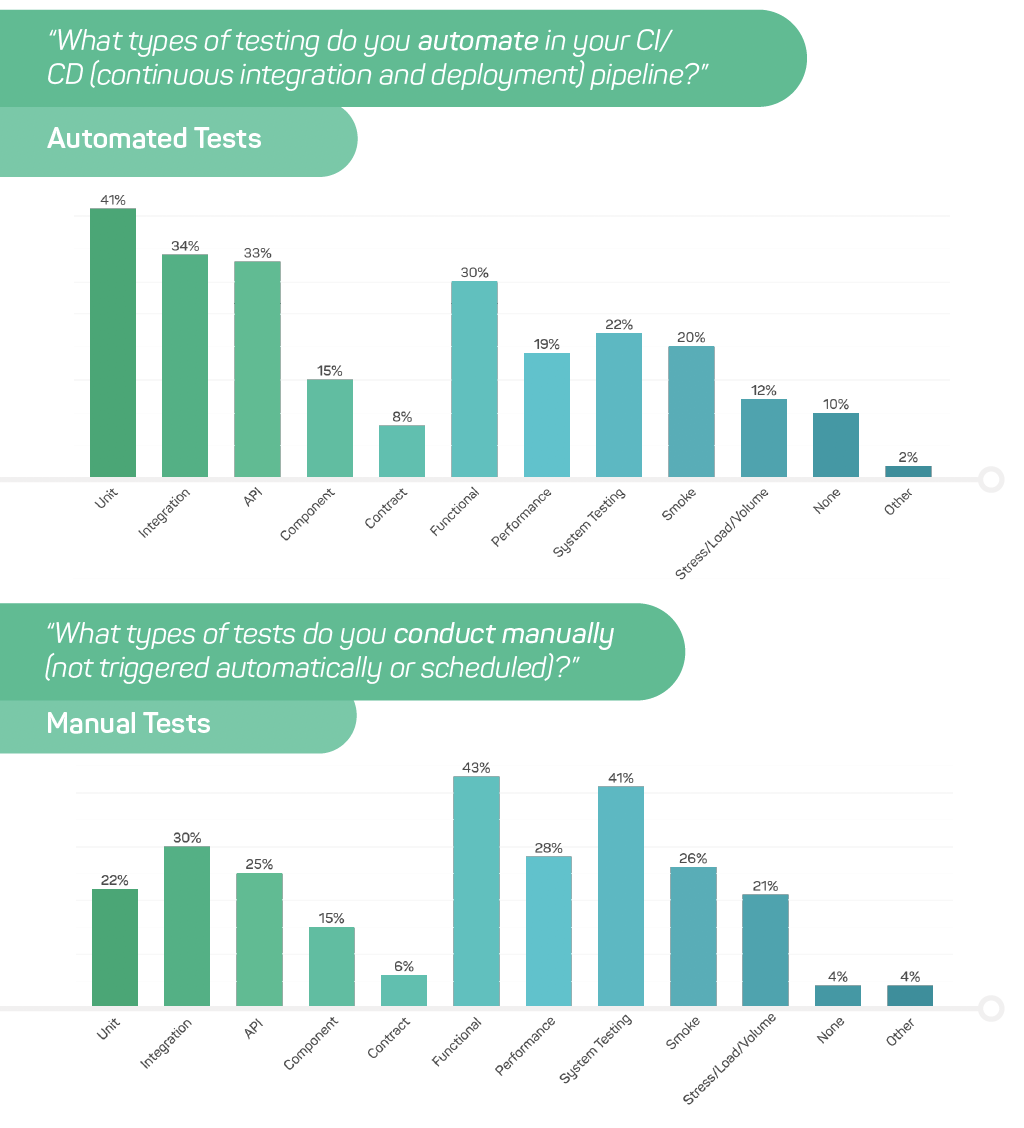

In DevOps, automation is foundational, so we compared automated tests in CI/CD with manual tests. We found that unit tests are significantly more likely to be automated and system tests are significantly more likely to be performed manually.

In general, we see a lot of automation earlier in the development lifecycle - unit, integration, and API tests. But fewer tests are automated further down the pipeline, such as system and performance tests. Many teams are beginning to understand the importance of automating tests further down the line. One team lead at a computer software company noted:

“We are thoroughly convinced in the value of automated end-to-end tests - we are working towards a world where we even require them in our PRs.”

But tests farther down the pipeline take more resources to run, and automating tests that interact with many different components or with the UI makes the test scripts much more complex to code with a framework based on Selenium WebDriver. Teams that take this route face challenges that delay their automation efforts, namely:

- End-to-end test automation is a specialty on its own with a specific set of frameworks and best practices

- It demands team members who are skilled in both QA and Dev - a rare breed

- Processes often create bottlenecks and silo the team because there’s not enough of that specialized skill to go around

Thus, we see a pattern where these types of tests are more likely to be done manually, despite the value of automating them. Manual testing has its purposes: it should be done when the testing at hand requires human thought patterns that can find issues outside the scope of a functional test, as in exploratory testing. As the core method of regression testing, however, manual testing requires an order of magnitude more time and people than automated testing to realize the same business benefits.

How much automation should you aim for?

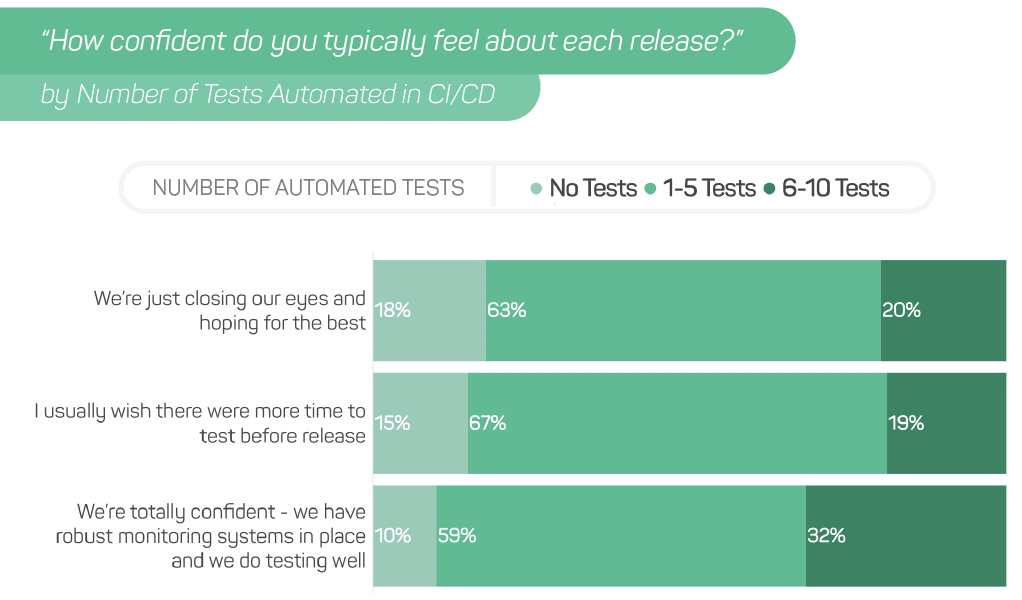

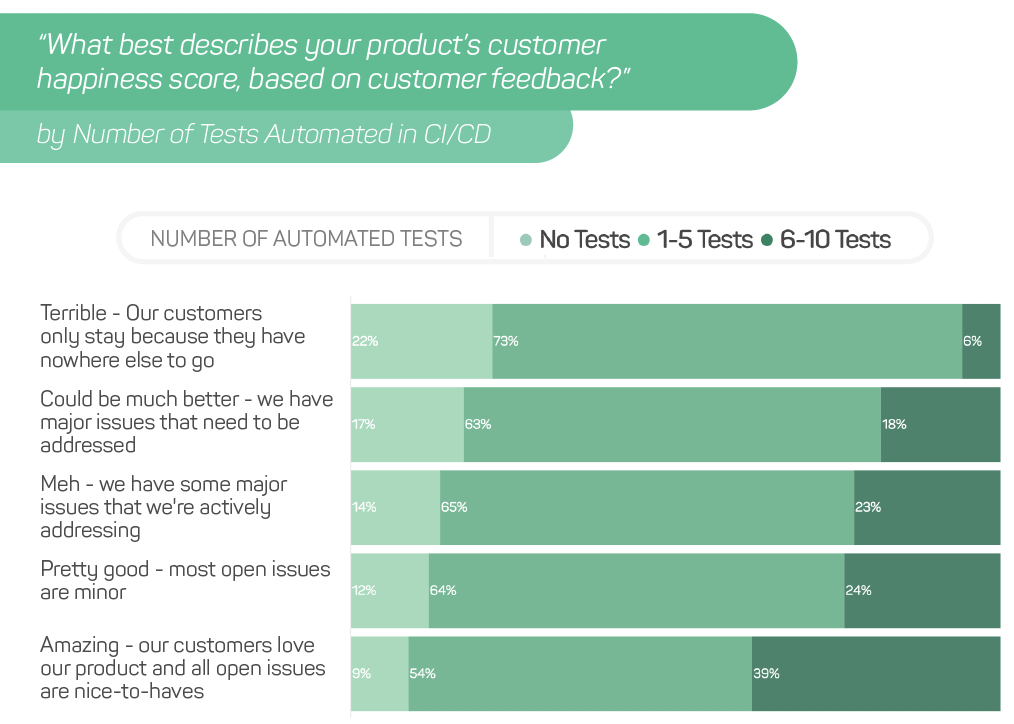

Tests come in many types, and our survey called out 10 of them. We grouped respondents by the number of unique types of tests they automated in their CI/CD pipeline and compared their customer happiness levels and their confidence levels for each release. In each case, respondents with 6-10 types of tests had significantly higher customer satisfaction and confidence than those with fewer than 5 types of tests.
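If you want to run a similar comparison on your own team or survey data, the grouping can be sketched in a few lines of Python. This is only an illustration: the field names (`automated_types`, `confidence`), the 1-5 rating scale, and the sample records are all made up for the example and are not our survey data. We also keep respondents with exactly 5 types in their own bucket here, and the sketch compares plain averages rather than testing for statistical significance.

```python
from statistics import mean


def bucket(n_types):
    """Map a count of automated test types (0-10) to a comparison group."""
    if n_types >= 6:
        return "6-10 types"
    if n_types < 5:
        return "fewer than 5 types"
    return "exactly 5 types"


def mean_by_bucket(respondents, field):
    """Average a numeric field (for example a 1-5 rating) within each group."""
    groups = {}
    for r in respondents:
        groups.setdefault(bucket(r["automated_types"]), []).append(r[field])
    return {name: mean(values) for name, values in groups.items()}


# Made-up records for illustration only; these are NOT survey responses.
sample = [
    {"automated_types": 8, "confidence": 4},
    {"automated_types": 7, "confidence": 5},
    {"automated_types": 2, "confidence": 3},
    {"automated_types": 3, "confidence": 2},
    {"automated_types": 5, "confidence": 4},
]

for group, average in mean_by_bucket(sample, "confidence").items():
    print(group, average)
```

The same function works for a customer satisfaction rating: pass a different field name.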

Our survey reveals that when it comes to testing, more automation in CI/CD positively impacts the business. Knowing that, we looked deeper into what exactly enabled those respondents to automate more.

The effects of cloud and CI/CD on test automation

CI/CD adoption isn’t one-size-fits-all. CI/CD ensures that code moves smoothly between the stages of app development with ongoing, automated testing and integration. Some teams have an automated CI pipeline between development and staging and leave deployment to production as a manual step; others streamline the entire pipeline from development to production with CD.

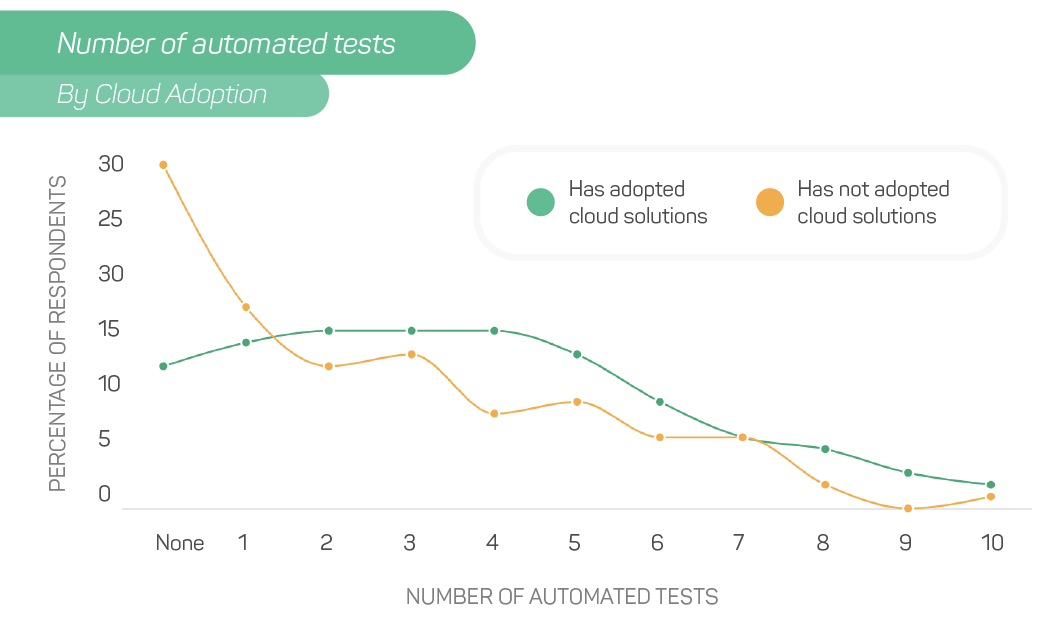

Surprisingly, according to the survey results, the adoption of continuous integration, delivery, and deployment had no impact on the number of different types of tests automated. However, we did notice that, on average, teams that were using some cloud technologies had more types of tests automated in their CI/CD pipeline. The benefits of adopting cloud infrastructure for its elasticity have been well known for many years. The survey results show that cloud enables more test automation as well.

Maintaining test infrastructure in-house can be a huge burden that pulls team members away from increasing test coverage and building a scalable testing strategy. Of the respondents who reported that they automate every one of the 10 types of tests, 93% were using cloud technology. Of those with 4 or more types of tests automated, 60% were using cloud. Of those who reported having no automated tests, 70% had not adopted any cloud infrastructure. The takeaway here is that hosting your testing infrastructure in the cloud rather than on-premises is the best way to implement test automation that will continuously scale with you.

We hope this information has given you insight into how to prioritize your tech transformation goals and how to incorporate testing that will work in the long run. For example, maybe your organization wants to improve the speed of delivery to fix issues faster for a better customer experience. Adopting continuous delivery may help, but what might be more effective is finding a cloud-based testing solution that can eliminate both the burden of test infrastructure maintenance and the time and unique skill set required to create and run entire test suites.

A cloud-based test automation solution like mabl can help your team create and run automated functional end-to-end tests quickly and easily, and glean deep quality insights such as visual and performance regressions. With no limit on how many tests can be run in parallel, automated mabl tests can be integrated into CI/CD without causing a bottleneck in your delivery pipeline: your entire test suite can complete in roughly the time it takes your longest single test to run. A cloud-first testing solution like mabl can help your team increase test coverage while you move at the speed of DevOps.
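To see why parallelism matters so much for pipeline time, here is a rough back-of-the-envelope sketch in Python. It is not how mabl schedules tests; the durations are hypothetical, and the model ignores startup and queueing overhead. It simply compares running tests one after another with spreading them across runners, using a simple longest-first assignment when runners are limited.

```python
def sequential_runtime(durations):
    """Wall-clock time when tests run one after another."""
    return sum(durations)


def parallel_runtime(durations, workers=None):
    """Wall-clock time when tests are spread across parallel runners.

    With workers=None every test gets its own runner, so the suite
    finishes when the slowest test does. Otherwise each test (longest
    first) goes to the runner with the least work assigned so far.
    """
    if not durations:
        return 0
    if workers is None or workers >= len(durations):
        return max(durations)
    loads = [0] * workers
    for d in sorted(durations, reverse=True):
        least_loaded = loads.index(min(loads))
        loads[least_loaded] += d
    return max(loads)


# Hypothetical test durations in seconds (illustrative only).
durations = [40, 95, 60, 120, 30]

print(sequential_runtime(durations))    # 345
print(parallel_runtime(durations, 2))   # 185
print(parallel_runtime(durations))      # 120
```

In this made-up suite, unlimited parallelism brings the run down from 345 seconds to 120 seconds, the length of the slowest test.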

Take a look at the full report to see more of what we mapped out in this year’s DevTestOps landscape, and keep an eye on the mabl blog as we unravel more findings in the coming weeks.