Which DevOps practices are worth adopting?

We recently surveyed the software testing community to understand the adoption of modern development practices and their impact on testing.

One of the things we wanted to take away from this survey was the ability to distinguish effective DevOps practices from harmful ones. We based the success criteria on team satisfaction and customer happiness.

This post includes only excerpts from the full report we produced. Head over to https://www.mabl.com/devtestops-survey-results for more findings like these.

The Respondents

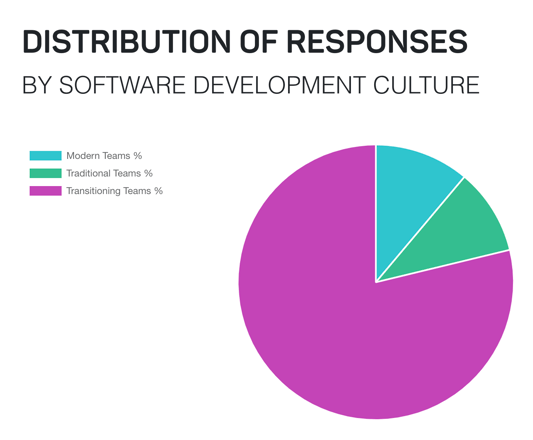

First, how did we distinguish DevOps teams from Traditional teams? A few survey questions were designed to classify the respondent, and each response was weighted more heavily if it trended towards an agile and DevOps culture. We tallied the scores for each respondent and assigned a category based on the final score.

Traditional respondents had siloed testing roles, little to no automation, and long release cycles. Transitioning respondents had some automation and a higher release frequency. Modern respondents had many processes automated, short release cycles, and testing tasks shared across many different roles in the organization.
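To make the scoring idea concrete, here is a minimal Python sketch of a weighted tally. The question names, answer options, weights, and category thresholds are all hypothetical and chosen only for illustration; they are not the values used in the survey.

```python
# Hypothetical weights: answers that trend toward an agile/DevOps culture score higher.
# None of these question names, options, or numbers come from the actual survey.
WEIGHTS = {
    "release_frequency": {"yearly": 0, "quarterly": 1, "monthly": 2, "weekly": 3, "daily": 4},
    "automation_level": {"none": 0, "some": 2, "most": 4},
    "who_tests": {"dedicated_qa_only": 0, "qa_and_devs": 2, "many_roles": 4},
}


def score(answers):
    """Sum the weight of each answer a respondent gave."""
    return sum(WEIGHTS[question][answer] for question, answer in answers.items())


def categorize(total):
    """Map a final score to a category (thresholds are assumptions)."""
    if total >= 9:
        return "Modern"
    if total >= 4:
        return "Transitioning"
    return "Traditional"


respondent = {
    "release_frequency": "monthly",
    "automation_level": "some",
    "who_tests": "qa_and_devs",
}
print(categorize(score(respondent)))
```

With these made-up weights, a respondent who ships monthly, has some automation, and splits testing between QA and developers lands in the Transitioning bucket.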

CI/CD Adoption

Let's look at the release pipeline. The top reasons to adopt a continuous integration and delivery pipeline are to deliver new features to users faster, to have a stable operating environment, and to resolve production problems faster. CI/CD is core to the concept of DevOps, as it requires the dev and ops (and test) teams to agree on how to implement the pipelines that tie development and production environments together.

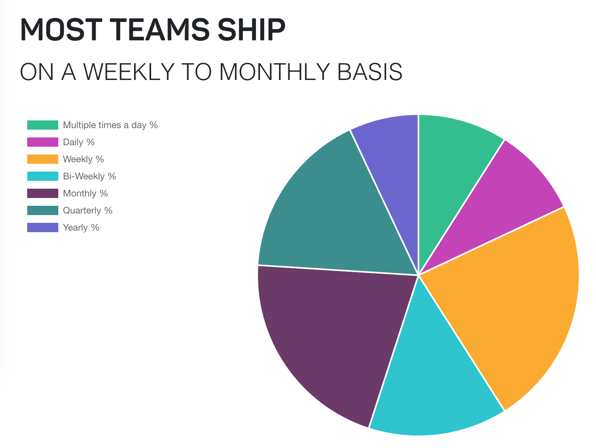

And with CI/CD you typically ship more frequently. Faster release cycles are core to both DevOps and Agile: the more frequently you ship, the shorter the feedback loop between development and customers, and the more feedback you get.

The most popular deployment frequencies ended up being monthly and weekly, with 58% of total respondents shipping every month or less.

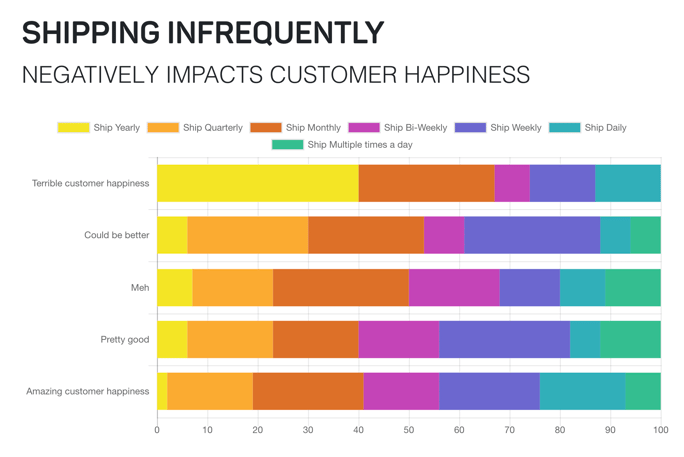

When correlated with customer happiness, the largest share of teams (40%) that reported a terrible customer happiness level (characterized by “Our customers only stay because they have nowhere else to go”) deployed on a yearly basis. On the other hand, respondents who had amazing customer happiness (characterized by “Our customers love our product and all open issues are nice-to-haves”) shipped on a monthly basis. This suggests that shipping infrequently hurts customer happiness, as we might have expected.
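For readers who want to run the same kind of comparison on their own survey data, here is a small Python sketch that finds the most common deployment frequency within each customer happiness level. The rows below are made up purely for illustration and are not the survey's data; the function name is also just an assumption.

```python
from collections import Counter, defaultdict

# Made-up (happiness, deployment frequency) rows for illustration only.
# These are NOT the survey's responses.
responses = [
    ("terrible", "yearly"),
    ("terrible", "yearly"),
    ("terrible", "monthly"),
    ("amazing", "monthly"),
    ("amazing", "monthly"),
    ("amazing", "weekly"),
]


def most_common_frequency(rows):
    """For each happiness level, return (most common frequency, its share)."""
    by_happiness = defaultdict(Counter)
    for happiness, frequency in rows:
        by_happiness[happiness][frequency] += 1

    result = {}
    for happiness, counts in by_happiness.items():
        frequency, n = counts.most_common(1)[0]
        result[happiness] = (frequency, n / sum(counts.values()))
    return result


summary = most_common_frequency(responses)
for happiness, (frequency, share) in summary.items():
    print(f"{happiness}: {frequency} ({share:.0%})")
```

Keep in mind that a breakdown like this shows correlation only; it does not by itself show that one factor causes the other.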

Conclusion: How often should you ship? Monthly or more often! ✅

Mean Time to Repair

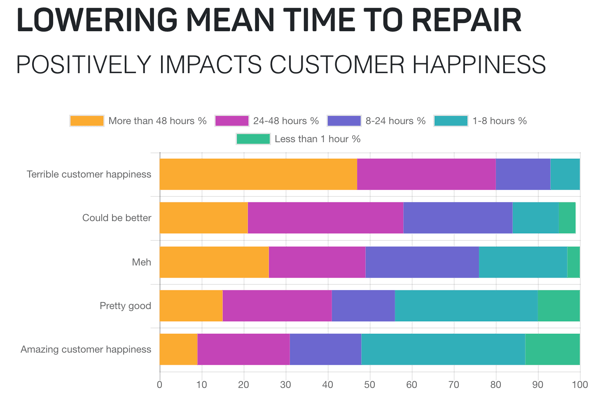

Your MTTR (mean time to repair) isn't just a KPI. The average time required to fix a bug is also the average amount of time you're leaving a bad impression on your customers. Fixing bugs fast can help repair your reputation, but it depends on whether you have a release pipeline that supports shipping a fix fast.
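If you don't track MTTR yet, it can be computed from incident timestamps. The sketch below assumes each incident is recorded as a pair of (time detected in production, time the fix shipped); the sample incidents and the function name are hypothetical.

```python
from datetime import datetime, timedelta


def mean_time_to_repair(incidents):
    """Average the time between detection and fix across all incidents."""
    durations = [fixed - detected for detected, fixed in incidents]
    return sum(durations, timedelta()) / len(durations)


# Hypothetical incidents: (detected in production, fix shipped).
incidents = [
    (datetime(2019, 3, 1, 9, 0), datetime(2019, 3, 1, 11, 0)),    # 2 hours
    (datetime(2019, 3, 8, 14, 0), datetime(2019, 3, 8, 18, 0)),   # 4 hours
    (datetime(2019, 3, 20, 8, 0), datetime(2019, 3, 20, 14, 0)),  # 6 hours
]

mttr = mean_time_to_repair(incidents)
print(mttr)
```

Measuring from detection to a shipped fix (rather than to a merged fix) keeps the number honest about how quickly the release pipeline can actually deliver the repair to customers.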

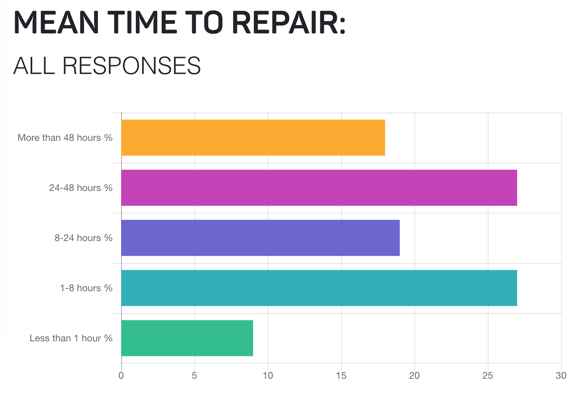

Most respondents fixed major bugs either within 8 hours or within 24 to 48 hours.

When comparing customer happiness levels to MTTR, those with amazing customer happiness levels fixed critical bugs within 1-8 hours of being discovered in production, and those with terrible customer happiness levels fixed critical bugs after more than 48 hours.

Conclusion: How quickly should you aim to fix production bugs? Within 1-8 hours. ✅

Test automation vs. manual testing

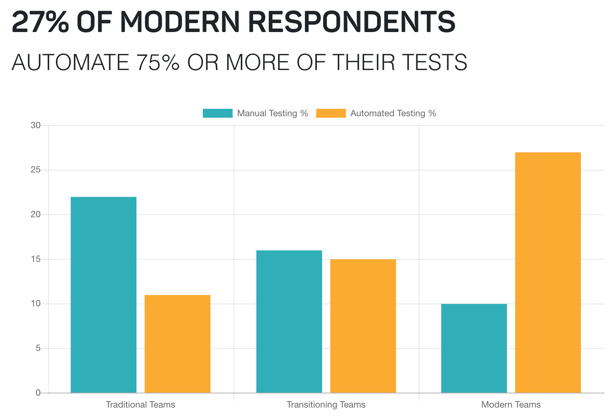

The chart below includes only respondents who conduct 75% or more of their tests either manually or via automation. The ratios of automated to manual testing in traditional and modern teams nearly mirror each other: traditional teams do 2x more manual testing than modern teams, and modern teams do 2x more automated testing than traditional teams.

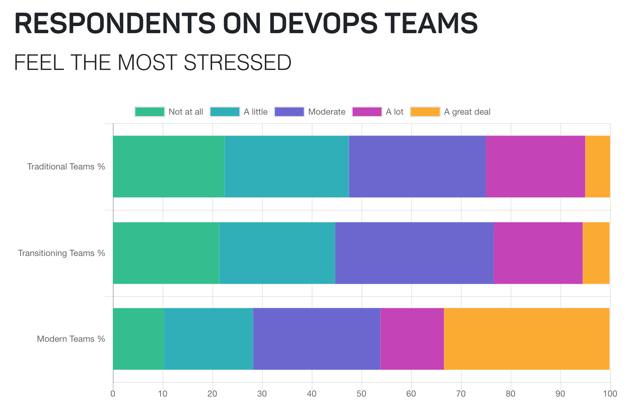

But is automating all of your tests the right thing to do? Something we noted in our last blog post is that DevOps teams tend to be more stressed than traditional or transitioning teams.

We expected that the more often teams shipped, the less stress they would feel. If releases are incremental, there should be fewer surprises in production and, hence, less stress. But the data tells a different story:

So the increase in automation doesn’t come without a price. Though we didn't dig into it this year, we hypothesize that the heavy maintenance burden of automated tests may add to the stress. Heavy maintenance on top of shorter release cycles can easily destroy any team's morale.

Ahem, *shameless plug warning* Did you know mabl helps reduce the UI test automation burden by auto-healing your tests when your app under test changes? mabl also comes with its own testing cloud, so you can offload the operational burden too. You can try it free at mabl.com.

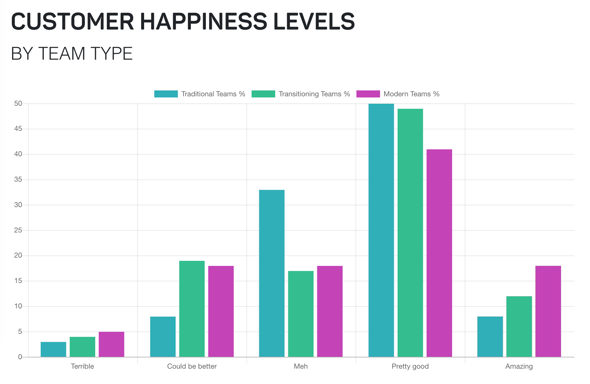

Do high stress levels pay off from a business standpoint? When looking at customer happiness levels across traditional and modern teams, the results look relatively flat across all teams:

So what seems to be more effective than simply automating everything is having a testing strategy that balances automation and manual testing.

Conclusion: How many of your tests should you automate? You shouldn't aim to automate everything. Your testing strategy should be thoughtful about what is automated and what is best tested with human eyes. Automate the "boring stuff" so manual testers can explore more. ✅

Conclusion

The DevOps practices that paid off were shipping monthly or more often and lowering your average MTTR. The picture was less positive when it came to testing: heavier automation came with more stress and did not translate into happier customers.

DevOps can give you a competitive edge, but happy customers and happy teams don't seem to come about by just automating “all the tests”. It’s about building a solid testing strategy behind it so you know what to automate, and what you still need to test manually.

Testing also shouldn’t be seen as a stop gate, but rather as a checkpoint in a never-ending race. You need to build a quality strategy for production. That's where quality counts the most.

Read the full report at https://www.mabl.com/devtestops-survey-results for even more findings that will help your team in your shift to DevOps.