Staging is the last phase in the deployment process before releasing to Production. Most of the detailed, time-consuming testing that ensures every part of the application works to specification was done in the previous stage, Integration Testing, but there is still more to do. Staging is the final dress rehearsal before the release to Production.

Creating the Staging Environment

A critical factor in Staging and Deployment testing is that the computing environment in which testing takes place must match the Production environment as closely as is reasonably possible. This means that machine configurations in Staging and Production must match. Server software, database and storage resources must match as well. If you're deployment testing in a hosted environment, getting the staging environment up usually involves no more than creating some temporary virtual machines or container deployments.

Companies that keep their computing resources on premises and work directly with hardware will have a harder time creating the Staging environment. Making Staging hardware match its Production counterpart can be time-consuming and expensive. The same is true of software configuration. The expense of using on-premises, bare-metal resources can be motivation enough to move to a virtualized environment. Standing up VMs, containers and device emulators is a whole lot easier and cheaper than having to acquire and provision boxes, tablets and cell phones. Services such as Amazon's AWS Device Farm exist to address this need.
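One way to make the matching requirement concrete is to compare a description of each environment and report every setting that differs. The following is a minimal sketch, not a definitive tool: the function name `config_drift`, the setting names and the values in `PRODUCTION` and `STAGING` are all hypothetical and chosen only for illustration.

```python
from typing import Any

# Marker used when a setting exists in only one environment.
MISSING = "<missing>"


def config_drift(production: dict[str, Any], staging: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return every setting whose value differs between the two environments.

    Each entry maps a setting name to a (production_value, staging_value) pair.
    """
    drift = {}
    for key in sorted(production.keys() | staging.keys()):
        prod_value = production.get(key, MISSING)
        stage_value = staging.get(key, MISSING)
        if prod_value != stage_value:
            drift[key] = (prod_value, stage_value)
    return drift


# Hypothetical environment descriptions, for illustration only.
PRODUCTION = {"os": "ubuntu-22.04", "cpu_cores": 8, "memory_gb": 32, "database": "postgres-15"}
STAGING = {"os": "ubuntu-22.04", "cpu_cores": 4, "memory_gb": 32, "database": "postgres-14"}

if __name__ == "__main__":
    for setting, (prod, stage) in config_drift(PRODUCTION, STAGING).items():
        print(f"{setting}: production={prod!r} staging={stage!r}")
```

An empty result means the two descriptions agree. In practice the descriptions would come from whatever inventory or provisioning tooling the team already uses.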

Companies that do their deployment testing in the cloud with a service provider such as AWS, Azure or Google Cloud have an easier time creating the staging environment. Typically, the effort is no more than executing scripts that provision the necessary computing environments, orchestrate them and then inject the initial data and files into the relevant data and file storage services. This sort of ephemeral provisioning is a reliable way to create apples-to-apples environments for deployment testing in Staging. Ephemeral provisioning is also cost-effective, because the expense of the testing environment is incurred only while the tests are being run. The best practice is to destroy the environments once the staging tests have run and recreate them for the next testing session. That way, companies are not paying for expensive test environments that sit unused.
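The provision, seed, test and destroy cycle described above can be sketched as a context manager that always tears the environment down, even when a test fails. This is an illustration under stated assumptions: `provision`, `seed_data` and `destroy` are hypothetical stand-ins that only record what they would do, where a real setup would call the provider's provisioning scripts.

```python
from contextlib import contextmanager

# Records each step so the flow is visible without real infrastructure.
events = []


def provision(name):
    """Hypothetical stand-in for a script that creates VMs or containers."""
    events.append(f"provision {name}")
    return {"name": name, "seeded": False}


def seed_data(env):
    """Hypothetical stand-in for loading initial data and files."""
    events.append(f"seed {env['name']}")
    env["seeded"] = True


def destroy(env):
    """Hypothetical stand-in for tearing the environment down."""
    events.append(f"destroy {env['name']}")


@contextmanager
def ephemeral_environment(name):
    """Provision and seed an environment, then destroy it no matter what."""
    env = provision(name)
    try:
        seed_data(env)
        yield env
    finally:
        destroy(env)


if __name__ == "__main__":
    with ephemeral_environment("staging-run") as env:
        events.append(f"test {env['name']}")
    print(events)
```

Putting the teardown in a `finally` clause is the design choice that matters here: the environment stops costing money as soon as the session ends, whether the tests passed or not.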

Testing in the Staging Environment

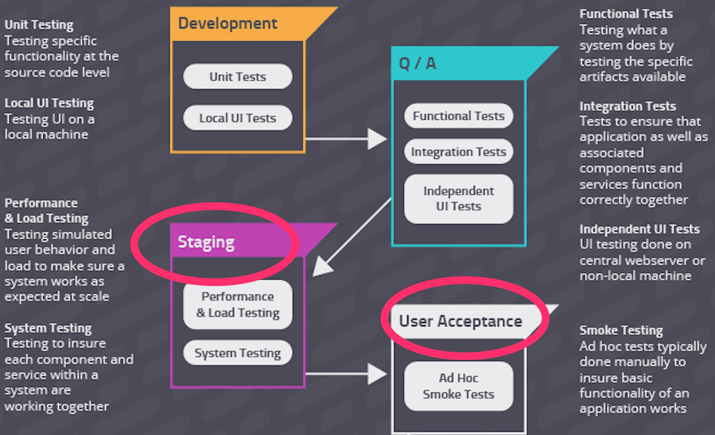

Once the staging environment is set up, the next step is to run the tests. As mentioned above, the heavy lifting was done in Integration Testing. The purpose of the tests in Staging is to ensure that the application under test meets business requirements and expectations. Thus, the usual tests performed are smoke tests and user acceptance testing (UAT).

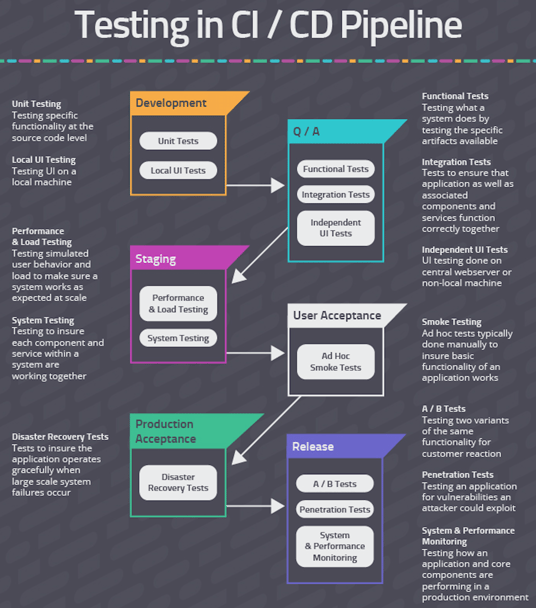

Staging and User Acceptance tests in the CI/CD Dev Lifecycle

Smoke Testing

A smoke test, sometimes also called a sanity test, is a fast test, run by a script or a human, that ensures that the application under test meets minimal expectations. For example, a human-run smoke test involves logging into the app and doing some usual activities, such as conducting a search or exercising a standard feature. This is quick and dirty testing. The purpose is to make sure that the usual and obvious features work as expected. The assumption is that if the primary features work, so should the more complex ones in the application’s feature stack. Of course, all of this rests on the assumption that rigorous, time-consuming testing was done back in Integration.
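A scripted smoke test can be as small as a short list of obvious checks run in order. The sketch below assumes a hypothetical `FakeApp` class standing in for the application under test. Its `login` and `search` methods, the credentials and the catalog are invented for illustration, and a real script would drive the actual application through its UI or API.

```python
class FakeApp:
    """Hypothetical stand-in for the application under test."""

    def __init__(self):
        self.logged_in = False

    def login(self, user, password):
        self.logged_in = password == "secret"
        return self.logged_in

    def search(self, term):
        if not self.logged_in:
            raise PermissionError("login required")
        catalog = ["red shoes", "blue shoes", "green hat"]
        return [item for item in catalog if term in item]


def smoke_test(app):
    """Run a few obvious checks and return a list of (check_name, passed)."""
    checks = [
        ("login", lambda: app.login("demo", "secret")),
        ("search", lambda: len(app.search("shoes")) > 0),
    ]
    results = []
    for name, check in checks:
        try:
            passed = bool(check())
        except Exception:
            # A crash in an obvious feature counts as a failed check.
            passed = False
        results.append((name, passed))
    return results


if __name__ == "__main__":
    for name, passed in smoke_test(FakeApp()):
        print(f"{name}: {'ok' if passed else 'FAILED'}")
```

The checks are deliberately shallow. They confirm that the primary features respond at all, and leave detailed verification to the earlier Integration Testing stage.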

User Acceptance Testing

User Acceptance Testing (UAT) is focused on the business. Software doesn't appear by magic. There is a buying party, either internal to the company or an external customer, whose needs must be satisfied. UAT is the place where the business confirms that the application meets expectations. Typically, a tester in UAT is a business user or product manager. In addition to confirming that the basic functionality works as expected, the tester will confirm that the application’s presentation meets branding, locale and style standards. For example, this means ensuring that the proper fonts are used, that the graphics meet company standards and that the overall usability of the product is acceptable.

Again, Staging is the last test session before production release. Thus, making sure that the business is satisfied with the code that is about to be released for public consumption is a paramount concern.

The Value of the A/B Approach to Production Release

In the old days of waterfall testing, all the features of an application were released at once and the test process was strictly sequential: code, then test, then deployment. If a test failed, the code went all the way back up the release chain. Today we take a different approach. We are in a continuous release cycle, in which we ship one small feature at a time and test in an iterative fashion.

The notion of continuous, pre-production testing has spilled over into Production release. Whereas in the past a new version of code replaced all of the previous code at once, today we often release a new version to a small segment of the user base and then, if all goes well, expand that segment until the new version has 100% release penetration. For example, out of every 100 requests to a website, 10 are directed to the new version of the code, while the remaining 90 are directed to the old version. This technique is called A/B testing. When the main goal is to limit risk rather than to compare versions, the same approach is often called a canary release.
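The 10-in-100 split described above can be sketched by hashing a user identifier into one of 100 buckets. This is one common way to do it, not the only one. The function name `assigned_version` and the `user-N` identifiers are hypothetical, and production systems usually make this decision in a load balancer or feature-flag service.

```python
import hashlib


def assigned_version(user_id: str, new_version_percent: int) -> str:
    """Return "new" or "old" for a user; the answer is stable across requests."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # a value from 0 to 99
    return "new" if bucket < new_version_percent else "old"


if __name__ == "__main__":
    users = [f"user-{n}" for n in range(10_000)]
    on_new = sum(assigned_version(u, 10) == "new" for u in users)
    print(f"{on_new} of {len(users)} users see the new version")
```

Because the bucket depends only on the identifier, a user keeps seeing the same version on every request. Raising the percentage only moves users from old to new, never the other way, which fits the gradual expansion toward 100%.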

A/B testing is useful in a variety of ways. First, the only true test environment of software is the real world. All the testing that comes before release is based on supposition. While pre-release testing will address most issues, it won’t address every operational issue, particularly when it comes to code that is subject to the whimsy of human interaction. Thus, releasing code to a subset of all users provides the random interaction required for real world testing without opening up the entire user base to a potentially bad experience.

The second benefit is one of comparative analysis. Remember, in an A/B test situation, both versions of code are fully operational and usage data is being gathered from each. Once enough data is gathered, analysts can measure and compare the usage results. A/B testing might reveal that users spend less time engaged with the new code than with the previous version, or that overall performance is slower. Once these patterns come to light, it’s relatively easy to revert all web traffic to the older version until the issues in the newer version can be addressed.
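The comparison and the decision to revert can be sketched as a simple rule over the usage data gathered from each version. This is a deliberately naive illustration: the function name `should_revert`, the 10% threshold and the session durations are all hypothetical, and a real analysis would also check that the difference is statistically significant before acting on it.

```python
from statistics import mean


def should_revert(old_sessions, new_sessions, max_drop=0.10):
    """Return True when mean engagement on the new version falls more than
    max_drop (as a fraction) below mean engagement on the old version."""
    old_avg = mean(old_sessions)
    new_avg = mean(new_sessions)
    return new_avg < old_avg * (1 - max_drop)


if __name__ == "__main__":
    # Hypothetical session durations in seconds, for illustration only.
    old_version = [300, 320, 280]
    new_version = [250, 260, 240]
    if should_revert(old_version, new_version):
        print("Engagement dropped: route all traffic back to the old version")
    else:
        print("Engagement held up: keep expanding the rollout")
```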

Putting It All Together

Staging is an important part of the deployment testing process. It’s the place where technology and business come together. Although the operational testing in Staging is nowhere near as extensive as that of Integration Testing, the insights gathered from the tests performed by business-oriented testers provide important information about the overall viability of the software about to be released. Most software is created to meet a real need, and those needs are usually best known by the folks running the business. Their acceptance counts. However, there is one group whose acceptance counts even more: the intended users of the code. Incorporating A/B testing as part of the release cycle provides the additional insights necessary for making code that counts. Making code that counts is what it’s all about.