As explained in the 2018 State of DevOps Report, high-performing software teams use key technical practices such as continuous testing, monitoring, and observability. What we’re trying to do with all of these practices is shorten the learning loop. As a developer about to check in changes for a new feature, wouldn’t it be nice to know before you commit whether the changes will behave as desired, and whether they will break something else in the application? That would be the ultimate feedback loop!

Getting feedback

Part of continuous testing is having automated test suites at various levels, such as unit, API, and UI, that cover various quality attributes, such as functionality, security, accessibility, and performance. We run these tests in our pipelines. Unit-level tests give us quick feedback. Feedback from UI tests takes longer, but gives us more confidence in our product.

Another part of continuous testing is continually exploring the product to learn whether it always behaves as expected in different user scenarios. This includes evaluating the various quality attributes such as the ones mentioned above. Learning from manual exploratory testing activities takes longer, and for teams practicing continuous delivery, probably occurs asynchronously. We use monitoring and observability to learn about production issues as quickly as possible, and rely on our pipelines to recover from failures quickly. Continuous delivery practices such as feature toggling help us manage the risk that we may not discover a problem until it’s in production.

Succeeding with quality

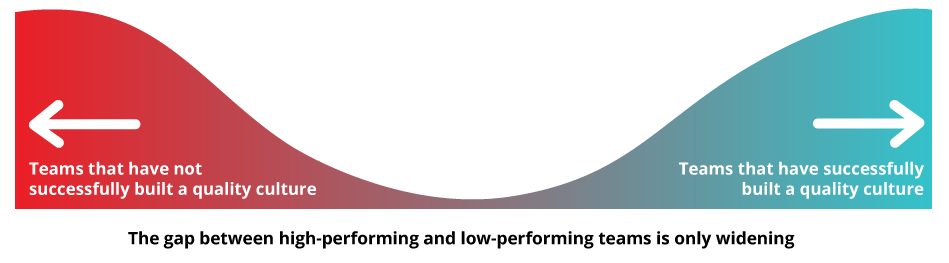

The State of DevOps survey reveals practices that produce elite-performing teams, but also shows that the gap between teams that successfully build a quality culture and those that do not keeps widening. At the same time, there’s all this buzz about artificial intelligence and machine learning. Can machine learning help less successful teams close the gap? Would it enable successful teams to shorten their learning cycles even more, so they can innovate even faster?

There’s not a simple answer. The attitudes, cultures, and funding of software delivery teams have more to do with their ability to perform well than specific tools and practices. We know it’s essential to hire good people and then support them to do their best work and continually improve. Will lower-performing teams even try to learn about AI and ML and what they might do for them? Conversely, will higher-performing teams wait to see if AI and ML live up to the hype before investing time to experiment with them? And for those of us who embrace these new capabilities, might we go down the wrong road because our models and algorithms are flawed?

I’ve been talking to various people about ways they wish machine learning could help with testing. These conversations were mostly focused on test automation, but that’s just part of a bigger picture: how might ML speed up our testing, shorten those feedback loops, and free up time to spend on more valuable testing activities? We want machine learning to help us find out sooner what impact a new change will have on our team, our customers, and our business. Let’s look at ways machine learning might help us humans learn faster.

Cutting down on time spent maintaining tests

A common response to my question is “reduce the time we waste analyzing test failures and maintaining tests.” It seems that lots of teams still struggle with “flaky tests”, false positives, false negatives, and mysterious test failures (“Why does it fail only in Safari…”). My own previous team was high-performing by any definition. We built a solid infrastructure for continuous delivery, monitoring, and analytics. We had thousands of automated tests at the unit level (practicing TDD), API level and UI level. And yet we still spent a large percentage of our time investigating test failures and updating tests to make them more reliable.

Applying machine learning to make tests self-maintaining is an obvious use of ML. mabl’s auto-healing capabilities aim to achieve this goal. We’re continually experimenting with more ways to optimize the algorithms mabl uses to identify the target element with which each step of a journey needs to interact. There are many other factors to creating maintainable automated tests, such as using good practices and patterns. Still, the ability for a UI test to learn what has changed on the page and be able to continue executing properly is a huge time-saver.

Perhaps those same benefits can be applied to lower-level tests as well. Automating tests at the API or service level is generally less onerous than through the UI, but those tests also require maintenance as the application changes. For example, we could use ML to pick up on new parameters for API endpoints and add new automated tests to cover them.
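Before any learning is involved, the starting point for that idea is simply knowing which parameters the tests never touch. Here is a minimal sketch, assuming we can get two maps of endpoint to parameter names: one from the API documentation and one from what the existing tests actually send. The function name, the endpoints, and the parameters are all hypothetical.

```python
def untested_parameters(documented, exercised):
    """Return endpoint -> sorted parameters that are documented but never sent by a test.

    Both arguments map an endpoint name to a collection of parameter names.
    """
    gaps = {}
    for endpoint, params in documented.items():
        missing = set(params) - set(exercised.get(endpoint, ()))
        if missing:
            gaps[endpoint] = sorted(missing)
    return gaps


# Hypothetical data: what the API documents vs. what the tests send.
documented = {
    "POST /orders": {"item_id", "quantity", "gift_wrap"},
    "GET /orders": {"page"},
}
exercised = {
    "POST /orders": {"item_id", "quantity"},
    "GET /orders": {"page"},
}

print(untested_parameters(documented, exercised))
```

A tool could use a report like this as the trigger for suggesting or generating new API tests.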

ML is good at learning from huge amounts of data. Analyzing test failures to detect patterns seems like a potential strength of ML. It might even help us detect potential problems before any test assertions fail, kind of like the canary in a coal mine, detecting “smells” in the code. That improves our chances of figuring out which change introduced the issue so we can fix it faster.
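Pattern detection in test results does not have to start with a trained model. As a rough illustration, here is a plain heuristic (not ML, and not how any particular tool works) that flags a test as flaky when it both passed and failed against the same commit. The shape of the run history and the test names are assumptions made for the example.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return sorted names of tests that both passed and failed on the same commit.

    `runs` is an iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)
    # Two distinct outcomes for one (test, commit) pair means the code did not
    # change between runs but the result did.
    flaky = {name for (name, _sha), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


# Hypothetical run history.
history = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),
    ("test_checkout", "abc123", False),
    ("test_checkout", "def456", True),
    ("test_search", "abc123", True),
]

print(find_flaky_tests(history))  # ['test_login']
```

Here `test_checkout` is not flagged, because its failure and its pass happened on different commits and a code change could explain the difference.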

More valuable tests

It might be possible to instrument production and test code so that it not only gives meaningful error messages, but logs all the information about an event associated with a failure. All that information would be hard for us humans to process, but if ML can help us get to the meaningful answers, we’ll spend less time analyzing test failures. We might also be able to use that data for future automated tests.

There may be other ways ML can help us design more reliable tests that don’t cause wasted time. Could it help us design better tests, perhaps detect anti-patterns in our test code? Maybe we could use ML to discover which tests could be pushed to a lower level, or parts of the system that could be safely faked or mocked out for a test to make it run faster.

Running tests concurrently in the cloud as mabl does speeds up our CI pipelines, but end-to-end tests running in a browser are slower than tests that run “below” or in place of the browser. It’d be helpful to get extra guidance. For example, if the automation tool told us, “These 77 UI tests post to the gravity-drive endpoint”, we might decide to test that endpoint directly via the API instead.

A common wish is to use ML to suggest which tests are most important to automate and even to automatically generate those tests. This could be based on analysis of production code to identify risky areas. An obvious potential use of ML is analysis of production usage to identify possible user scenarios and suggest or automatically create automated test cases to cover them. Optimizing the time we spend automating tests by intelligently automating the most valuable ones would be a big win.

Using ML to analyze production use could help us identify more user flows through the application and gather details we could use to emulate production use more accurately for performance, security, accessibility and other types of testing. And since we will have more time for exploratory testing thanks to our reliable, non-flaky automated tests, learning about edge case user flows could lead us to more effective exploratory testing of all these different quality attributes.

All this means we could gain a deeper understanding about our product in a much shorter time frame.

Test data

Creating and maintaining production-like data on which automated tests can operate has been a pain point for me and my teams. I’ve often seen serious issues go undetected into production because the problematic edge case required a combination of data that we didn’t have in our test environment. What if we could use ML to identify a comprehensive, representative sample of production data, scrub it to remove any privacy concerns, and create canonical sets of test data to be used for both automated test suites and manual exploratory testing?
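The scrubbing step is the easiest part of that wish to picture in code. This is a minimal sketch, assuming records arrive as dictionaries and that we already know which fields are sensitive. The field names and the key are made up. Keyed hashing like this is pseudonymization rather than full anonymization, so the remaining fields would still need review before the data leaves production.

```python
import hashlib
import hmac

# Hypothetical list of fields to replace with stable tokens.
SENSITIVE_FIELDS = {"name", "email"}


def scrub_record(record, secret_key):
    """Return a copy of `record` with sensitive values replaced by keyed hashes.

    The same input value always maps to the same token, so relationships
    between records survive the scrubbing.
    """
    scrubbed = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS:
            digest = hmac.new(
                secret_key, str(value).encode("utf-8"), hashlib.sha256
            ).hexdigest()
            scrubbed[field] = f"{field}_{digest[:12]}"
        else:
            scrubbed[field] = value
    return scrubbed


key = b"example-key-not-for-real-use"
order = {"name": "Ada Lovelace", "email": "ada@example.com", "total": 42.5}
print(scrub_record(order, key))
```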

Yes, if we instrument our production code and log comprehensive data about events, and set up good monitoring and alerts in production, we can recover quickly from production failures. Reducing mean time to recovery (MTTR) is a good goal, and low MTTR is associated with high-performing teams. In domains with high-impact risks such as life-critical applications, we may still want to use techniques like exploratory testing, in an environment as much like production as we can make it, to reduce the chance of failures. Though nothing will look or behave like production except production!

Things humans may not do so well

It depends on your context, of course, but for most software applications, it’s not advisable to try to automate every bit of testing. We need our human eyes and other senses, and we need our critical and lateral thinking skills to learn what risks lurk in our software and what features are still missing.

That said, it’s important to automate the boring stuff so that we can do a better job with the interesting testing. One of the first applications of ML in test automation has been visual checking. For example, mabl uses screenshots of each page visited in the automated functional journeys to build visual models. It learns what parts of each page should change, such as carousels, ad banners, or date and time information, and ignores changes in those areas. It intelligently provides alerts when it detects visual differences in the areas that should always look exactly the same.
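To make the idea of learning which regions change concrete, here is a toy sketch. It is an assumption-laden illustration of the general technique, not a description of mabl’s models: screenshots are tiny grids of pixel values, any pixel that varied across baseline runs is treated as dynamic, and differences are reported only outside those pixels.

```python
def learn_dynamic_mask(baselines):
    """Return a grid of booleans, True where a pixel varied across baseline runs."""
    first = baselines[0]
    return [
        [
            any(shot[r][c] != first[r][c] for shot in baselines[1:])
            for c in range(len(first[0]))
        ]
        for r in range(len(first))
    ]


def visual_diffs(baseline, candidate, mask):
    """Return (row, col) positions that changed outside the dynamic regions."""
    return [
        (r, c)
        for r, row in enumerate(baseline)
        for c, pixel in enumerate(row)
        if not mask[r][c] and candidate[r][c] != pixel
    ]


# Two baseline runs: the top-right pixel (say, a clock) differs between them.
run1 = [[1, 1, 5], [2, 2, 2]]
run2 = [[1, 1, 9], [2, 2, 2]]
mask = learn_dynamic_mask([run1, run2])

# A new run: the clock changed again, and so did one pixel that should be stable.
candidate = [[1, 1, 7], [2, 0, 2]]
print(visual_diffs(run1, candidate, mask))  # [(1, 1)]
```

Only the stable pixel is reported. The clock pixel is ignored because it was already seen to vary between baseline runs.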

If you’ve ever done visual checking yourself by staring at a UI page in production side by side with the same one in the test environment, you know how soul-crushing this work can be. I’m all for letting the software robots do the tedious, repetitive work and tell us when it’s time to put on our thinking caps and bring all our brain power to bear on potential failures.

Other quality attributes

For many software products, functionality takes a back seat to other quality attributes such as performance, reliability, accessibility and security. Can machine learning help us with those? mabl uses ML to detect anomalies in page load time and test execution time. ML could help automate testing of more detailed performance measures, such as event processing, response time, and animation in UI pages.
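A very simple statistical stand-in for this kind of anomaly detection is a z-score check against recent history. The sketch below is only that, under the assumption that we keep a list of recent page load times in milliseconds. The threshold and the numbers are illustrative, and a real tool would likely use something more robust.

```python
from statistics import mean, stdev


def is_anomalous(history_ms, latest_ms, threshold=3.0):
    """Return True if `latest_ms` is more than `threshold` standard deviations
    away from the mean of `history_ms`."""
    if len(history_ms) < 2:
        return False  # not enough history to judge
    mu = mean(history_ms)
    sigma = stdev(history_ms)
    if sigma == 0:
        return latest_ms != mu
    return abs(latest_ms - mu) / sigma > threshold


# Hypothetical page load times from recent runs, in milliseconds.
recent = [800, 820, 790, 810, 805]

print(is_anomalous(recent, 1400))  # True
print(is_anomalous(recent, 815))   # False
```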

Some people are starting to use ML, including supervised learning, image recognition, and natural language processing, to improve the effectiveness of automated accessibility testing. Books explaining how to use ML for penetration testing, as well as security testing tools using ML, are already available. And here again, our ability to do more in-depth analysis of production use data with ML could help us identify more ways that our applications may be vulnerable to security exploits or create accessibility issues for some customers.

No more unknown unknowns?

What if ML could learn everything about our product and how it is used by people or by other systems, and understand the connections between different areas of production code and how they impact each other? Maybe the future of ML includes this scenario: before a developer commits a code change, she can determine whether the change will work as expected and whether it will cause some other part of the application to start misbehaving. We won’t even have to wait for our CI to run all our automated regression tests, or wait until we get some analytics from our production monitoring. Now that is a fast learning loop.

As we journey towards this Utopia of instant knowledge, we can build better tools that use ML to do a lot of the work for us and free up our time to experiment with more innovations. At mabl we put the time that ML frees up to good use, thinking up more features that will save us more time and provide us more information more quickly. ML can help us all do a better job of shortening feedback loops in our delivery pipelines and learning from continuous testing. The end result is frequent delivery of new valuable features for our customers, at a sustainable pace. ML gives us more in our toolbelt to succeed with agile and DevOps values, principles and practices.